AI-powered ‘mind captioning’ tool turns brain activity into clear text

Scientists develop mind captioning, a technique that turns brain activity into text and may help people who cannot speak.

Edited by: Joseph Shavit

Edited by: Joseph Shavit

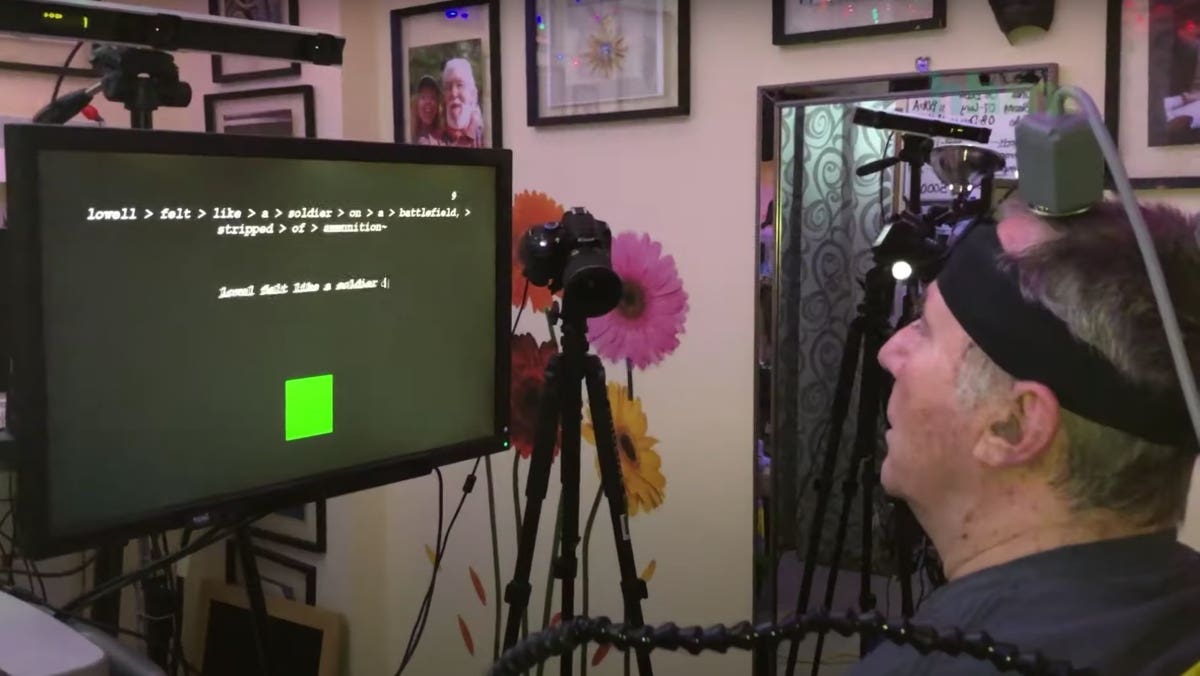

A new technique called mind captioning translates brain activity into text, offering hope for people with communication challenges. (CREDIT: BrainGate)

The idea that your thoughts could someday be written out on a page feels strange at first, especially if you know the weight of being unable to speak. For people living with conditions that limit communication, the silence can be as painful as the illness itself.

Now, a new study led by cognitive neuroscientist Tomoyasu Horikawa offers early evidence that this barrier might eventually be eased. The work describes a technique called mind captioning that turns patterns of brain activity into text. It does this by capturing the meaning inside mental images and translating it into natural, structured language.

The research marks a step beyond earlier decoding methods that could only identify small details like a single object or a spoken word. Instead, this approach targets full scenes, events, and relationships, similar to what you might picture when a memory suddenly surfaces or when a vivid moment flashes across your mind. It reads like science fiction, yet it reflects a careful mix of brain imaging and artificial intelligence.

How Thoughts Become Words

To build the system, the team asked volunteers to watch natural videos rich with movement and detail while undergoing functional MRI. These recordings captured activity related to objects, actions, and settings within each clip. The scientists then trained a model to match these patterns to semantic features drawn from a large language model. Those features help represent how words relate to each other, allowing the system to tie brain activity to text.

Instead of forcing the AI to invent full sentences at once, the method began with rough candidate phrases. Through repeated cycles of word swaps and adjustments, the text gradually aligned with the meaning found in the brain data. After many steps, the description grew coherent and accurate, often matching what participants later said they saw or remembered.

One of the most surprising discoveries came when the researchers removed the brain’s traditional language regions from the analysis. The system still produced strong descriptions. That result suggests that the meaning behind what you see lives in many areas of the cortex, not only in places long linked to speech.

What the Brain Shows About Meaning

By comparing how different regions respond, the team noticed a divide within visual processing areas. Rear portions of the brain held simpler elements, such as recognizing an object or identifying movement. Regions closer to known language centers combined these elements into more complete ideas, closer to full scenes or sentences. This pattern hints that the brain builds meaning in layers, moving from simple recognition to complex interpretation.

Yet the study also revealed limits. The captions used to train the model focused only on visible details. Because of that, the generated text rarely captured emotional tone or personal interpretation. Your inner sense of what a moment feels like remained hidden. The researchers say that adding participants’ own reports could help future systems recover those deeper layers.

Capturing Memories and Images in the Mind

After viewing the videos, volunteers were asked to imagine the clips again while inside the scanner. This was the tougher test. Mental images often shift quickly, and memories rarely replay with perfect clarity. Still, the system produced coherent descriptions that matched what participants later reported. In fact, the captions from this imagery period were more accurate than those produced during the earlier cueing period, when volunteers were still reading instructions.

Alex Huth, a computational neuroscientist at the University of California, Berkeley who was not part of the study, called the level of detail “surprising” and noted how difficult it is to decode such rich content from brain activity alone.

What the Tool Can and Cannot Do

Although the system clearly captured relational structure within scenes, there were challenges. Because the videos came from the internet, they were not controlled for unusual or contradictory actions. This makes it unclear how well the system could handle uncommon or impossible scenarios. Another complication came from the slow timing of the brain’s blood flow response, which may have blended some brain activity related to reading cues with activity from mental imagery.

Still, the decoder was trained on visual data rather than text-based tasks. This suggests that visual meaning drove the results more than language itself.

A Possible Lifeline for People Who Cannot Speak

The most hopeful part of this work lies in its potential for people living with severe communication barriers. The method does not rely on classic language networks, which often suffer damage after a stroke or other neurological injury. Instead, it taps into the brain’s richer visual and conceptual systems. That could one day allow a person with aphasia to share ideas through mental imagery that an AI system converts to text.

Yet the technology is far from ready for everyday use. It depends on MRI machines that are costly and slow, and it needs large amounts of personal training data. It also struggles to capture emotions or unusual scenes. But the study provides a proof of concept that once seemed unreachable.

It shows that your thoughts carry measurable patterns and that those patterns can be translated into language with surprising accuracy.

Research findings are available online in the journal Science Advances.

Related Stories

- Revolutionary brain implant converts thoughts to text with over 90% accuracy

- First-ever integrated microchip converts brain activity directly to text

- New brain-computer interface turns silent thoughts into words

Like these kind of feel good stories? Get The Brighter Side of News' newsletter.

Shy Cohen

Writer