AI revolution: Researchers teach everyday objects to sense, think, and move

AI meets design as researchers teach everyday objects to sense, think, and move, quietly assisting people in daily life.

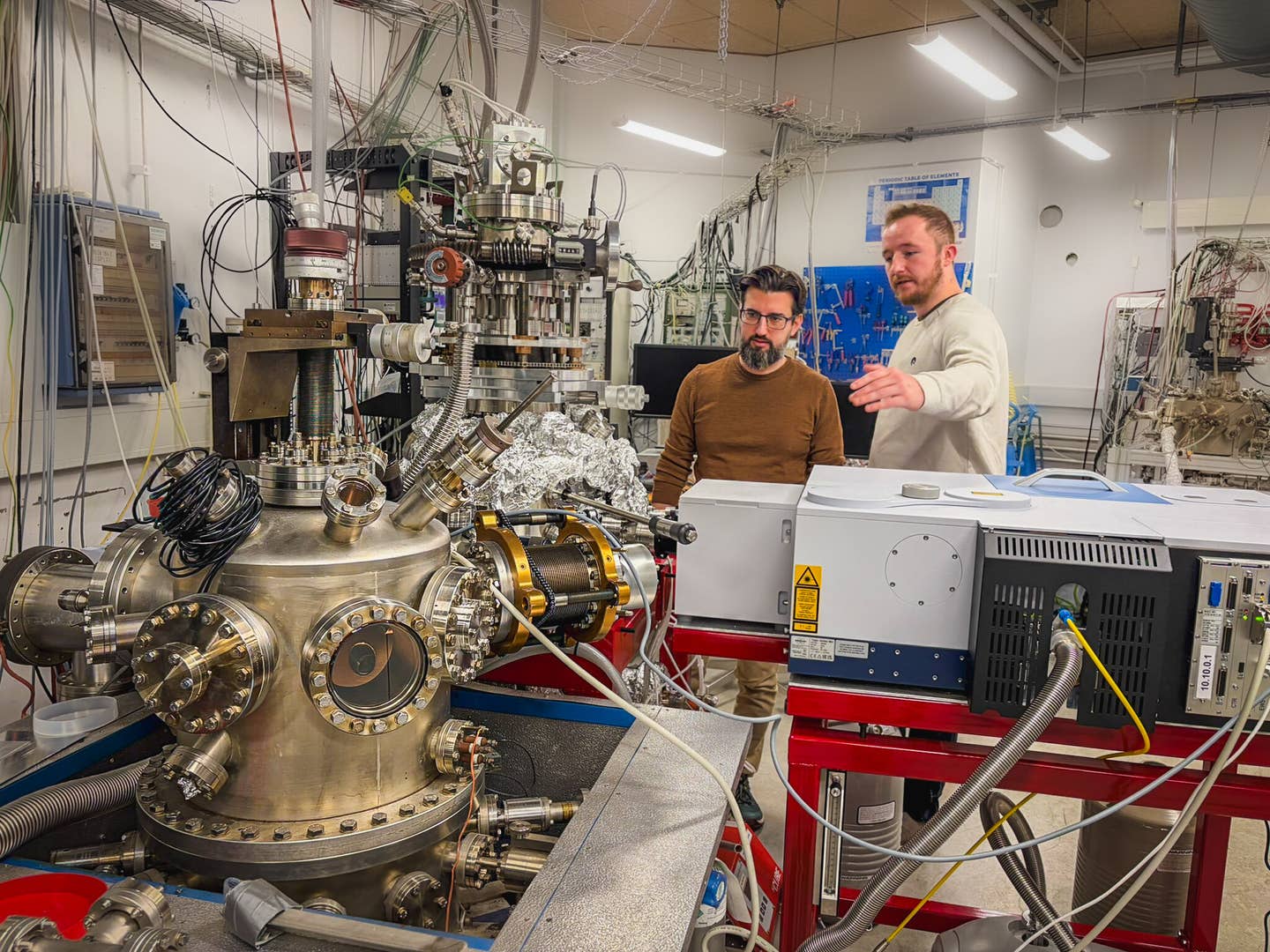

PhD student Violet Han, foreground, and Assistant Professor and Interactive Structures Lab Director Alexandra Ion with their wheeled robot prototype. (CREDIT: Carnegie Mellon University)

A stapler that slides towards your hand before you reach for it. A lamp that tilts forward when you start to read. A chair that quietly adjusts to ease your back. The scenarios sound like science fiction, but Carnegie Mellon University's Human-Computer Interaction Institute (HCII) researchers claim they will become routine in the near future.

Rather than building more robots, they want to make things that already exist learn, anticipate, and help without getting in the way. The approach, which they call unobtrusive physical AI, aims to reshape how artificial intelligence engages with the physical world.

From Smart Devices to Intelligent Objects

Directed by assistant professor Alexandra Ion, the Interactive Structures Lab in HCII is merging robotics, large language models (LLMs) and computer vision to make objects that can think and move. Mundane objects like mugs, utensils or plates are mounted on tiny, wheeled bases that enable them to position themselves across surfaces.

The system starts with a ceiling-mounted camera that scans the space and detects people and objects in real time. Those visual signals are converted into text descriptions, a form the LLM can read. The model interprets the scene, predicts what a person might need next, and commands the objects within reach to help.
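To make the camera-to-text-to-LLM step more concrete, here is a minimal sketch of how detections might be turned into a text prompt and how a model's reply might be parsed back into a movement command. Everything here is an assumption for illustration: the `Detection` format, the coordinate frame, the prompt wording, and the `MOVE <object> TO <x>, <y>` reply format are hypothetical and not the lab's actual interface. The LLM call itself is left out, and a canned reply stands in for it.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class Detection:
    """One object or person found by the overhead camera (hypothetical format)."""
    label: str  # e.g. "person", "mug"
    x: float    # table-surface position in metres (assumed coordinate frame)
    y: float


def describe_scene(detections: List[Detection]) -> str:
    """Convert detections into a plain-text description an LLM can read."""
    lines = [f"- {d.label} at ({d.x:.2f}, {d.y:.2f})" for d in detections]
    return "Scene:\n" + "\n".join(lines)


def build_prompt(scene: str, movable: List[str]) -> str:
    """Ask the model to predict a helpful move in a fixed, parseable format."""
    return (
        f"{scene}\n"
        f"Movable objects: {', '.join(movable)}.\n"
        "What will the person need next? Reply exactly as "
        "'MOVE <object> TO <x>, <y>' or 'NONE'."
    )


def parse_command(reply: str) -> Optional[Tuple[str, float, float]]:
    """Turn the model's text reply into (object, x, y), or None if no move."""
    reply = reply.strip()
    if not reply.startswith("MOVE ") or " TO " not in reply:
        return None
    obj, coords = reply[len("MOVE "):].split(" TO ", 1)
    parts = coords.split(",")
    if len(parts) != 2:
        return None  # malformed coordinates: do nothing rather than guess
    try:
        return obj.strip(), float(parts[0]), float(parts[1])
    except ValueError:
        return None


# Usage: a canned reply stands in for the (omitted) LLM call.
scene = describe_scene([Detection("person", 0.10, 0.50), Detection("mug", 0.80, 0.20)])
print(build_prompt(scene, ["mug"]))
print(parse_command("MOVE mug TO 0.25, 0.45"))  # ('mug', 0.25, 0.45)
```

Asking the model for a fixed reply format, and ignoring anything that does not match it, is one simple way to keep a physical system from acting on an ambiguous answer.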

"The user doesn't have to tell the object to perform something," said Ion. "It understands what has to be done and does it automatically."

Doctoral student Violet Han, who works with Ion, added that the goal is to bring AI out of screens and into the real world. "We already have digital assistants," Han said. "Now we're focusing on AI that helps in the physical space, because users already trust the objects they interact with every day."

The Philosophy of Unobtrusive Assistance

This work is one piece of a larger ambition: invisible AI. Instead of clanking, whirring robots or chatty voice assistants, silent physical AI blends into the background of daily life, delivering help that feels natural rather than artificial.

A light that switches on at nightfall, a shelf that lowers to within arm's reach, a desk that adjusts its height as you stand up: each is an unobtrusive form of intelligence that responds to the situation rather than to a command. The goal is to make living easier and more comfortable without adding new appliances or screens.

According to the study, four design pillars support these systems: invisibility, adaptability, safety and calm interaction.

The technology blends into the background, responding to human needs in subtle and safe ways. Instead of warnings or flashing lights, the objects move quietly and glide smoothly, an approach meant to create comforting, supportive spaces rather than jarring, attention-grabbing ones.

From Reactive to Proactive

Most smart gadgets today are reactive. They sit and wait for a command: "Turn on the lights" or "Play this song." Unobtrusive physical AI does the opposite: it acts before you ask.

Surfaces and objects could soon assist you intuitively: a countertop might shift ingredients aside as you cook, a door handle could open when your hands are full, and a shelf might reorder itself based on how often you use each item. Such small, predictive gestures move technology toward what scientists call "context-aware intelligence."

"The idea is to make it work automatically without being noticeable," Ion said. "If all is well, you shouldn't even notice it's there."

Engineering Intelligence Into Material

One of the group's most ambitious aims is to engineer intelligence as a property of materials. Traditional robots are large, visible, and clunky. Unobtrusive AI hides its mechanisms inside everyday forms.

With advances in soft robotics, engineers are using shape-memory alloys, elastic polymers, and thin actuators that allow objects to bend, twist, or move without appearing robotic. A table could subtly push a book toward you. A wall panel could change its shape to direct airflow or improve acoustics.

This convergence of design and AI calls for collaboration among roboticists, materials scientists, and industrial designers. Each object becomes both a machine and a piece of furniture: aesthetically unremarkable, yet functional and smart.

Applications in Daily Life

The potential uses span nearly every environment. In homes, invisible AI could help older residents move around safely or reduce physical strain by rearranging furniture. At the office, chairs and desks could encourage better posture throughout the day. In classrooms, flexible surfaces could reconfigure themselves automatically for presentations or group work.

Researchers expect the systems to eventually help people with disabilities by reducing the number of spoken or manual requests they need to make. Instead of asking for help, users would find that the world around them subtly responds to what they are doing.

Ion described one such scenario: you come home carrying shopping bags, and a fold-down shelf extends from the wall so you can set them down. "We desire technology that's transparent," she said on an episode of the School of Computer Science's Does Compute podcast. "It needs to be so integrated into daily life that you don't even realize it's technology anymore."

Challenges Ahead

Of course, this vision faces significant challenges. Embedding intelligence in objects raises questions about power use, data privacy, and trust. Because these systems observe and learn from human behavior, privacy safeguards are essential. The researchers aim to keep as much processing as possible local, on the device itself, to avoid round-the-clock cloud monitoring.

Energy efficiency is another problem. Systems built from many small actuators and sensors need each component to draw very little power. Future generations may rely on low-power circuitry or on harvesting energy from light, motion, or body heat.

And then there's the social side. Not everyone will accept furniture that moves around on its own. Acceptance will depend on whether such systems prove safe, predictable and genuinely useful, not creepy or intrusive.

A Three-Layer Framework

The authors propose a three-layer framework of perception, reasoning and actuation. Sensors observe the world. AI models infer context and intent. Actuators then carry out changes in the physical world, whether through motion, lighting changes, or haptic feedback.
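The three layers can be pictured as one pass of a control loop. The sketch below is a toy illustration under stated assumptions, not the authors' implementation: the sensor keys (`book_open`, `lux_normalised`), the `Context` and `Action` types, and the threshold are all hypothetical, and a fixed rule stands in for the AI model in the reasoning layer. It reuses the article's opening example of a lamp that tilts forward when someone starts to read.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class Context:
    """Perception output: what the sensors suggest is happening (hypothetical)."""
    person_reading: bool
    light_level: float  # 0.0 = dark, 1.0 = bright (assumed scale)


@dataclass
class Action:
    device: str
    command: str


def perceive(raw: Dict[str, float]) -> Context:
    """Perception layer: turn raw sensor values into a structured context."""
    return Context(
        person_reading=raw.get("book_open", 0.0) > 0.5,
        light_level=raw.get("lux_normalised", 1.0),
    )


def reason(ctx: Context) -> Optional[Action]:
    """Reasoning layer: a fixed rule stands in for the AI model's inference."""
    if ctx.person_reading and ctx.light_level < 0.3:
        return Action("lamp", "tilt_forward")
    return None  # nothing to do: stay still and unobtrusive


def actuate(action: Action, log: List[str]) -> None:
    """Actuation layer: here it only logs; a real system would drive a motor."""
    log.append(f"{action.device}: {action.command}")


def step(raw: Dict[str, float], log: List[str]) -> Optional[Action]:
    """One pass through perception -> reasoning -> actuation."""
    action = reason(perceive(raw))
    if action is not None:
        actuate(action, log)
    return action


log: List[str] = []
step({"book_open": 1.0, "lux_normalised": 0.2}, log)  # reading in dim light
step({"book_open": 0.0, "lux_normalised": 0.2}, log)  # no one reading
print(log)  # ['lamp: tilt_forward']
```

Keeping the layers as separate functions means the rule in `reason` could be swapped for a learned model without touching the sensing or actuation code.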

Together, these layers make the system feel less like a robot and more like a cooperative partner. In the longer term, the objective is to create technology that subtly helps people without demanding their attention.

Ion's lab recently presented the work at the 2025 ACM Symposium on User Interface Software and Technology (UIST) in Busan, South Korea, where it drew interest from researchers developing new ways to integrate AI into the physical world.

Practical Implications of the Research

If this vision is realized, it could fundamentally change how people engage with technology. Instead of pulling your attention onto devices, AI would recede into the background, making life easier, safer and more convenient.

Older adults could receive subtle physical help without clunky controls. Employees could have adaptive workplaces that reduce fatigue. Children could learn in classrooms that reconfigure themselves automatically for collaborative work.

By making intelligence a quiet companion woven into the fabric of everyday experience, unobtrusive physical AI could be the next step in human-machine symbiosis.

Research findings are available to download online at the project website.


Shy Cohen

Science & Technology Writer