Brain-inspired AI breakthrough: Machines learn to see smarter

Researchers created Lp-Convolution, a brain-inspired AI tool that improves image recognition and runs faster with fewer resources.

New AI tech mimics the brain to improve image recognition accuracy while cutting computing costs and boosting real-world performance. (CREDIT: CC BY-SA 4.0)

Machines have come a long way in seeing and understanding images, but they still don’t see quite like humans do. A new development in artificial intelligence (AI), however, may bring us closer to that goal. Researchers have created a method called Lp-Convolution, which helps machines focus on important parts of an image—similar to how the human brain works. This technique could make AI smarter, faster, and more efficient in real-world situations like driving, medicine, and robotics.

The team behind this breakthrough includes scientists from several global research centers, including the Institute for Basic Science. They aimed to fix one of AI’s biggest problems: how to process complex images with both speed and accuracy, while using fewer computing resources. Inspired by the way the human brain processes images, they designed a new approach that gives AI the ability to adjust its focus dynamically.

Why Traditional AI Falls Short

To understand how this new method works, it helps to know how current AI systems process images. Most image recognition tools use Convolutional Neural Networks (CNNs). These systems scan pictures with small, fixed square filters to find patterns. This method works well in many cases but can miss bigger picture details or more flexible shapes. It's like looking at a forest one leaf at a time and hoping to figure out the whole scene.
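To make the idea of a fixed square filter concrete, here is a minimal sketch in Python with NumPy. The function name `conv2d_valid`, the toy 6×6 image, and the hand-written edge filter are illustrative assumptions, not taken from the study; real CNNs learn their filter values and run on optimized libraries.

```python
import numpy as np

def conv2d_valid(image, kernel):
    """Slide a fixed square kernel over a 2D image (stride 1, no padding)."""
    kh, kw = kernel.shape
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    out = np.zeros((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            # Each output value only sees one small kh x kw patch.
            patch = image[i:i + kh, j:j + kw]
            out[i, j] = np.sum(patch * kernel)
    return out

# Toy image: dark left half, bright right half (a vertical edge).
image = np.zeros((6, 6))
image[:, 3:] = 1.0

# Hand-written 3x3 filter that responds to vertical edges.
edge_kernel = np.array([[-1.0, 0.0, 1.0],
                        [-1.0, 0.0, 1.0],
                        [-1.0, 0.0, 1.0]])

response = conv2d_valid(image, edge_kernel)
print(response[0])  # strongest response where the patch straddles the edge
```

Every output value depends only on a 3×3 patch, which is why a single small filter cannot capture structure that spans a large part of the image.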

Another kind of AI, called Vision Transformers (ViTs), takes a different route. These systems look at the entire image at once, which can improve accuracy. However, they need large amounts of computing power and large datasets to train. That makes them hard to use in everyday tasks like phone apps, security cameras, or medical tools that can’t afford supercomputers.

The brain, by contrast, doesn't work like a CNN or a ViT. It processes visual information through selective and flexible connections. Instead of scanning every detail or needing vast power, the brain quickly spots what's important in any scene—whether it’s a familiar face in a crowd or a ball flying toward your head.

The question researchers asked was simple: can a machine do the same?

Lp-Convolution: Filters That Flex Like the Brain

The answer came in the form of Lp-Convolution. This method changes how CNNs use filters. Instead of sticking with square shapes, it lets the filters stretch in different directions: horizontally, vertically, or any shape in between. This flexibility comes from a family of probability distributions called the multivariate p-generalized normal distribution (MPND).
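One way to picture a stretchable filter is as an ordinary square grid of weights multiplied by a smooth mask whose shape is controlled by a few numbers. The sketch below is a simplified, axis-aligned illustration in Python with NumPy. The function `lp_mask`, its parameters `p`, `sigma_x`, and `sigma_y`, and the exact formula are assumptions made for illustration; the researchers' actual parameterization may differ, and in a trained network these values would be learned rather than set by hand.

```python
import numpy as np

def lp_mask(size, p, sigma_x, sigma_y):
    """Hypothetical mask exp(-(|x/sigma_x|^p + |y/sigma_y|^p)) on an odd-sized grid."""
    half = size // 2
    coords = np.arange(-half, half + 1, dtype=float)
    x, y = np.meshgrid(coords, coords)  # x varies along columns, y along rows
    return np.exp(-(np.abs(x / sigma_x) ** p + np.abs(y / sigma_y) ** p))

# p = 2 with a larger horizontal scale: a mask stretched left to right.
wide = lp_mask(size=7, p=2.0, sigma_x=3.0, sigma_y=1.0)

# A large p with equal scales: close to a flat box with sharp sides.
boxy = lp_mask(size=7, p=16.0, sigma_x=2.5, sigma_y=2.5)

# The mask reweights an ordinary 7x7 grid of filter weights.
weights = np.random.default_rng(0).normal(size=(7, 7))
masked_weights = weights * wide
```

In this sketch, `sigma_x` and `sigma_y` control how far the filter reaches in each direction, while `p` controls how sharply it cuts off.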

The filters don’t stay the same across all images. They change depending on the task, just like how your brain pays more attention to certain parts of a scene. For example, when reading, you focus on lines of text. When playing sports, your eyes track motion. Lp-Convolution mimics this adaptability, solving a long-standing problem in AI called the “large kernel” issue.

In traditional CNNs, increasing filter size (say, using 7×7 filters instead of 3×3) often adds more parameters but doesn’t improve results. It also makes the system slower. Lp-Convolution avoids this by reshaping filters in smarter ways. It uses fewer resources while still improving accuracy.
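A quick back-of-the-envelope count shows why larger filters are costly. The sketch below uses the standard formula for the number of weights in a 2D convolution layer; the channel count of 64 is an arbitrary example, not a figure from the study.

```python
def conv_weight_count(kernel_size, in_channels, out_channels):
    """Number of weights in one 2D convolution layer (bias terms ignored)."""
    return kernel_size * kernel_size * in_channels * out_channels

small = conv_weight_count(3, 64, 64)  # 3x3 filters
large = conv_weight_count(7, 64, 64)  # 7x7 filters
print(small, large, round(large / small, 2))  # prints 36864 200704 5.44
```

Going from 3×3 to 7×7 multiplies the weights in the layer by 49/9, or about 5.4, regardless of the number of channels.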

Testing the Brain-Like Method

To prove that Lp-Convolution works, the team tested it on standard image sets, including CIFAR-100 and TinyImageNet. These are popular benchmarks in AI, used to test how well machines can recognize objects.

In these tests, Lp-Convolution beat older models like AlexNet and even performed better than advanced systems such as RepLKNet. What’s more, it showed strong results even when the images were blurry, noisy, or corrupted. That matters in the real world, where perfect images are rare.

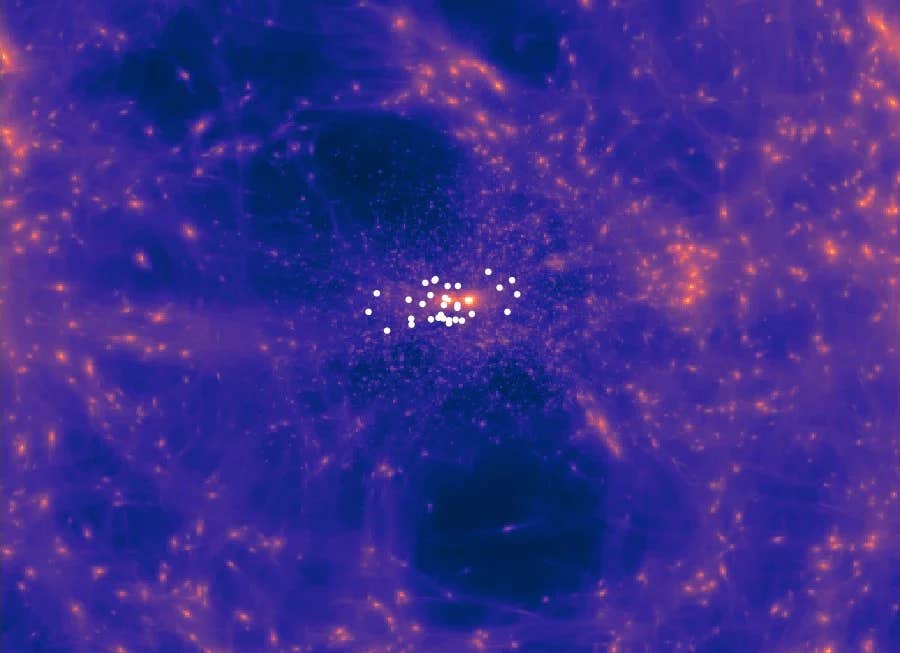

The researchers also found something surprising. When they examined how Lp-Convolution processed data, they noticed a pattern similar to activity in animal brains—specifically mouse brain signals. When the AI filters took on a shape close to a bell curve, or Gaussian distribution, its behavior mirrored the way biological neurons fire.
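The link between the filter shape and the bell curve can be seen in the one-dimensional version of the p-generalized normal distribution. The form below is the standard textbook parameterization, with location μ and scale α as symbols chosen here for illustration; the study's multivariate version may be written differently.

```latex
f(x) = \frac{p}{2\alpha\,\Gamma(1/p)}
       \exp\!\left(-\left(\frac{|x-\mu|}{\alpha}\right)^{p}\right)

% Setting p = 2 and using \Gamma(1/2) = \sqrt{\pi}:
f(x) = \frac{1}{\alpha\sqrt{\pi}}
       \exp\!\left(-\frac{(x-\mu)^{2}}{\alpha^{2}}\right)
```

With p = 2 this is exactly a Gaussian with variance α²/2. With p = 1 it becomes the sharper-peaked Laplace distribution, and as p grows large it approaches a flat box on the interval from μ − α to μ + α, which resembles a conventional square filter.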

“We humans quickly spot what matters in a crowded scene,” said Dr. C. Justin Lee, one of the study’s leaders. “Our Lp-Convolution mimics this ability, allowing AI to flexibly focus on the most relevant parts of an image—just like the brain does.”

Real-World Uses: Safer Cars, Better Diagnosis, Smarter Robots

The benefits of this system reach far beyond lab tests. Since Lp-Convolution works faster and uses fewer resources, it can fit into everyday tech. For example, self-driving cars need to make split-second decisions. If their cameras can’t process an image fast enough, the results could be deadly. Lp-Convolution helps cars spot obstacles faster and more accurately.

In hospitals, doctors often use AI to study scans or X-rays. Traditional systems might miss faint signs of illness. Lp-Convolution can highlight tiny details, making it easier to catch diseases early.

Robots, too, stand to gain. They need to see and react in changing environments. Whether it's a robot sorting packages or one helping in a disaster zone, flexible vision makes a big difference. “This work is a powerful contribution to both AI and neuroscience,” said Dr. Lee. “By aligning AI more closely with the brain, we’ve unlocked new potential for CNNs, making them smarter, more adaptable, and more biologically realistic.”

What Comes Next for Lp-Convolution

The team plans to keep improving the technology. Their next step is to test Lp-Convolution in harder tasks, like solving visual puzzles or making decisions in real time. They hope to bring AI even closer to human-like thinking, without needing supercomputers or endless data.

Their work suggests that copying the brain’s design can lead to better machines. Instead of relying on raw power, smarter structure wins. As Lp-Convolution suggests, a flexible, focused system can see more by working less.

Research findings are available online here.

Note: The article above was provided by The Brighter Side of News.
