New AI model revolutionizes medical imaging with 90% less computing power

Rice University researchers created MetaSeg, an AI framework that performs medical image segmentation using a fraction of the computing power required by conventional models.

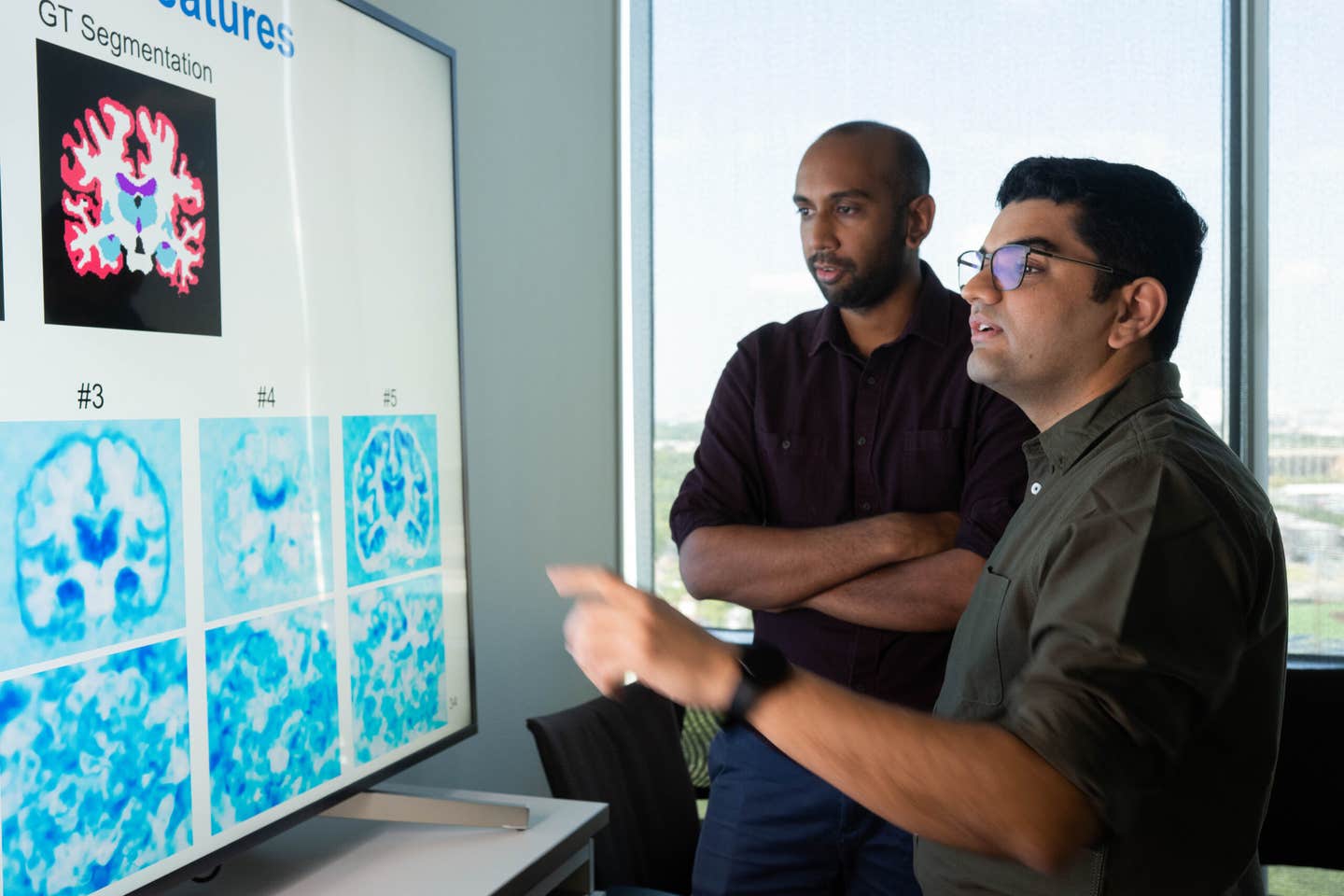

Guha Balakrishnan and Kushal Vyas created MetaSeg, which matches U-Net’s MRI segmentation accuracy using 90% fewer parameters. (CREDIT: Jeff Fitlow/Rice University)

When doctors review brain scans, every pixel or voxel in the image must be labeled. The cerebral cortex, hippocampus, ventricles, and other structures all need to be marked so that physicians can diagnose an illness, plan an operation, or monitor disease. This process is called medical image segmentation, and it is laborious, painstaking work.

For decades it was done by hand, until AI came along. In recent years, deep learning models such as U-Nets have changed how clinicians annotate and interpret scans. But although U-Nets perform very well, they require enormous datasets and powerful computers to run. For small research teams or hospitals, that can put AI-driven imaging out of budget.

Researchers at Rice University may now have a leaner alternative. Their new system, MetaSeg, matches or comes close to the accuracy of U-Nets while using about 90% fewer parameters, according to their experiments. The model, developed by Kushal Vyas, Ashok Veeraraghavan, and Guha Balakrishnan, combines two leading-edge ideas in machine learning: implicit neural representations (INRs) and meta-learning.

A Less Complex, Smarter Path to Segmentation

Traditional models like U-Nets and vision transformers learn by processing huge labeled datasets. MetaSeg works differently. It uses implicit neural representations, neural networks that treat each image as a continuous signal (a function from coordinates to intensity values) rather than as a grid of pixels. INRs can capture extremely fine detail and store information compactly, but until now they could not generalize beyond the single image they were trained on.
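
To make the idea of an image as a continuous signal concrete, here is a minimal NumPy sketch of a coordinate network: it takes real-valued (x, y) positions and returns an intensity, so it can be queried at any point rather than only at stored pixels. The class name `TinySiren`, the layer sizes, and the SIREN-style sine activations and initialization are illustrative assumptions, not MetaSeg's architecture, and the weights are random rather than fitted to a scan.

```python
import numpy as np


class TinySiren:
    """Hypothetical coordinate network mapping (x, y) to an intensity value.

    Sine activations and the initialization follow the SIREN style; the
    sizes are arbitrary and the weights are random (not fitted to any scan).
    """

    def __init__(self, hidden=32, omega=30.0, seed=0):
        rng = np.random.default_rng(seed)
        self.omega = omega
        sizes = [(2, hidden), (hidden, hidden), (hidden, 1)]
        self.layers = []
        for i, (fan_in, fan_out) in enumerate(sizes):
            # First layer: U(-1/fan_in, 1/fan_in); later layers scaled down by omega.
            bound = 1 / fan_in if i == 0 else np.sqrt(6 / fan_in) / omega
            W = rng.uniform(-bound, bound, (fan_in, fan_out))
            self.layers.append((W, np.zeros(fan_out)))

    def __call__(self, coords):
        """coords: (N, 2) array of real-valued positions; returns (N,) intensities."""
        h = coords
        for W, b in self.layers[:-1]:
            h = np.sin(self.omega * (h @ W + b))
        W, b = self.layers[-1]
        return (h @ W + b)[:, 0]  # final layer is linear

    def n_params(self):
        return sum(W.size + b.size for W, b in self.layers)


net = TinySiren()
pixels = net(np.array([[0.0, 0.0], [0.5, 0.5]]))  # points on a pixel grid
between = net(np.array([[0.123, 0.456]]))          # a point between pixels
print(net.n_params())  # 1185 weights, versus 65,536 values in a 256x256 slice
```

The image lives in the weights: once fitted, the same 1,185 numbers would answer queries at any position, which is the sense in which INRs are compact. Whether a network this small could represent a real scan is a separate question this sketch does not address.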

The Rice researchers got around that constraint with meta-learning, a technique that lets AI models adapt quickly to new information and is often described as teaching a model to "learn how to learn." In essence, MetaSeg learns not only from the images it is trained on but also from the process of learning itself.

"Instead of employing U-Nets, MetaSeg employs implicit neural representations, a neural network framework that has heretofore not been discovered useful or explored for image segmentation," said Vyas, a Ph.D. student in electrical and computer engineering and the paper's lead author.

The model has two main components: a reconstruction network that predicts pixel intensity values, and a segmentation head that assigns each pixel a class label. Training uses two nested loops: an inner loop fits the model to a single image, and an outer loop tunes the system so that it performs well across many images.
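
The two-component design and the inner loop can be sketched as follows. This is a hypothetical toy, not the authors' code: a shared trunk over Fourier features of the pixel coordinates feeds a reconstruction head (one intensity per pixel) and a segmentation head (one score per class per pixel). The inner-loop step uses only the reconstruction loss, so it needs pixel intensities but no labels. The frequency encoding, `tanh` trunk, sizes, and learning rate are all assumptions; in a trained system the starting weights, including the segmentation head, would come from the outer loop, whereas here they are random, so the resulting labels are placeholders.

```python
import numpy as np

rng = np.random.default_rng(0)


def fourier_features(coords, n_freqs=4):
    """Encode (N, 2) coordinates in [0, 1) as sines and cosines at integer frequencies."""
    freqs = np.arange(1, n_freqs + 1)                  # (F,)
    angles = 2 * np.pi * coords[:, :, None] * freqs    # (N, 2, F)
    feats = np.concatenate([np.sin(angles), np.cos(angles)], axis=-1)
    return feats.reshape(len(coords), -1)              # (N, 4F)


class TwoHeadINR:
    """Hypothetical two-head coordinate network (illustrative, not the authors' code).

    shared trunk:  z = tanh(phi @ W)
    recon head:    intensity = z @ a   (one value per pixel)
    seg head:      logits = z @ B      (one score per class per pixel)
    """

    def __init__(self, in_dim, hidden, n_classes):
        self.W = rng.normal(0, 0.3, (in_dim, hidden))
        self.a = rng.normal(0, 0.3, hidden)
        self.B = rng.normal(0, 0.3, (hidden, n_classes))

    def forward(self, phi):
        z = np.tanh(phi @ self.W)
        return z, z @ self.a, z @ self.B

    def recon_loss(self, phi, y):
        _, pred, _ = self.forward(phi)
        return 0.5 * np.mean((pred - y) ** 2)

    def inner_step(self, phi, y, lr):
        """One gradient step on the reconstruction loss only: needs pixels, not labels."""
        z, pred, _ = self.forward(phi)
        resid = (pred - y) / len(y)             # dLoss/dpred
        grad_a = z.T @ resid
        dz = np.outer(resid, self.a)            # dLoss/dz
        grad_W = phi.T @ (dz * (1 - z ** 2))    # back through tanh
        self.W -= lr * grad_W
        self.a -= lr * grad_a


# Usage on a toy 16x16 image: fit the pixels, then read labels from the seg head.
side = 16
g = np.arange(side) / side
xx, yy = np.meshgrid(g, g, indexing="ij")
coords = np.stack([xx.ravel(), yy.ravel()], axis=1)
intensity = 0.5 * np.sin(2 * np.pi * xx.ravel()) * np.cos(2 * np.pi * yy.ravel())

phi = fourier_features(coords)
model = TwoHeadINR(phi.shape[1], hidden=16, n_classes=3)
loss_before = model.recon_loss(phi, intensity)
for _ in range(100):  # inner loop on this one image
    model.inner_step(phi, intensity, lr=0.1)
loss_after = model.recon_loss(phi, intensity)

_, _, logits = model.forward(phi)
labels = logits.argmax(axis=1).reshape(side, side)  # per-pixel class map
```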

Through this nested training, MetaSeg learns an initialization that lets it adapt to a new scan in just a few gradient updates. In practical terms, it can take in a new brain MRI, adapt in a few steps, and output an accurate segmentation map, all without annotated data at test time.
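
The value of a learned starting point can be shown on a deliberately tiny problem. The sketch below swaps in Reptile, a simple first-order meta-learning rule, as a stand-in for whatever meta-learning procedure the paper actually uses. Each hypothetical "scan" is a 1D signal whose three weights vary slightly around a shared mean (`MEAN_W`, an assumption), and the inner loop is three plain gradient steps.

```python
import numpy as np

rng = np.random.default_rng(0)

# Toy "scans": 1D signals on 64 samples, built from three fixed features.
x = np.linspace(0, 2 * np.pi, 64, endpoint=False)
phi = np.stack([np.sin(x), np.cos(x), np.ones_like(x)], axis=1)  # (64, 3)
MEAN_W = np.array([2.0, -1.0, 0.5])  # hypothetical weights of a "typical" scan


def sample_task():
    """Draw one toy scan whose weights vary slightly around MEAN_W."""
    return phi @ (MEAN_W + rng.normal(0, 0.1, 3))


def loss(w, y):
    """Mean squared reconstruction error (halved)."""
    return 0.5 * np.mean((phi @ w - y) ** 2)


def adapt(w0, y, steps=3, lr=0.5):
    """Inner loop: a few plain gradient-descent steps on a single scan."""
    w = w0.copy()
    for _ in range(steps):
        w -= lr * phi.T @ (phi @ w - y) / len(y)
    return w


def reptile(outer_steps=300, meta_lr=0.5):
    """Outer loop (Reptile): move the shared init toward each scan's adapted weights."""
    w0 = np.zeros(3)
    for _ in range(outer_steps):
        y = sample_task()
        w0 += meta_lr * (adapt(w0, y) - w0)
    return w0


w_meta = reptile()
y_new = sample_task()                               # a scan not seen in meta-training
loss_meta = loss(adapt(w_meta, y_new), y_new)       # 3 steps from the learned init
loss_zero = loss(adapt(np.zeros(3), y_new), y_new)  # 3 steps from scratch
```

The learned initialization ends up near the average of the task weights, so three steps from it fit a new signal far better than three steps from zero. In MetaSeg the analogous starting point is a whole network learned over many training scans, which this linear toy only gestures at.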

Performance that Punches Above Its Weight

To test MetaSeg, the researchers used the OASIS-MRI dataset of more than 400 brain MRI scans. The data was divided into training, validation, and test sets, with segmentation labels for up to 35 brain regions. The researchers compared MetaSeg against established baselines: U-Net for 2D scans and SegResNet for 3D segmentation.

The results were striking. For 2D MRI segmentation, MetaSeg achieved a Dice score (a standard measure of overlap between a predicted segmentation and the reference labels) of 0.93 with just 83,000 parameters, compared with U-Net's 0.96 with 7.7 million parameters. For fine-grained 24-class segmentation, MetaSeg even edged out U-Net slightly, reaching 0.86 with just 1 million parameters. On 3D MRI scans, it matched SegResNet's Dice score of 0.95 with one-tenth the number of parameters.
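
For readers unfamiliar with the metric, Dice measures the overlap between a predicted region A and a reference region B as 2|A ∩ B| / (|A| + |B|), from 0 (no overlap) to 1 (perfect overlap). A minimal NumPy sketch, computed per class and then averaged, follows. How empty classes are scored and which classes enter the average are conventions that vary between papers; the choices below are assumptions, not the MetaSeg evaluation protocol.

```python
import numpy as np


def dice(pred, target, label):
    """Dice overlap 2|A & B| / (|A| + |B|) for one class label."""
    a = pred == label
    b = target == label
    denom = a.sum() + b.sum()
    if denom == 0:
        return 1.0  # assumption: a class absent from both maps counts as full agreement
    return 2.0 * np.logical_and(a, b).sum() / denom


def mean_dice(pred, target, labels):
    """Unweighted average of per-class Dice over the given labels."""
    return float(np.mean([dice(pred, target, k) for k in labels]))


target = np.array([[0, 0, 1, 1],
                   [0, 0, 1, 1],
                   [2, 2, 2, 2],
                   [2, 2, 2, 2]])
pred = target.copy()
pred[0, 2] = 0  # one class-1 pixel mislabeled as class 0

print(dice(pred, target, 1))                 # 6/7, about 0.857
print(mean_dice(pred, target, [0, 1, 2]))    # 173/189, about 0.915
```

A single mislabeled pixel lowers two classes at once (class 1 to 6/7, class 0 to 8/9), which is why small boundary errors show up clearly in Dice on small structures.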

These results earned the paper, "Fit Pixels, Get Labels: Meta-learned Implicit Networks for Image Segmentation," the Best Paper Award at the Medical Image Computing and Computer Assisted Intervention (MICCAI) conference from among more than 1,000 accepted papers.

MetaSeg also reached peak performance quickly. After just two iterations, the model had a Dice score of 0.85, which rose to 0.95 by 100 iterations. By contrast, competing models take thousands of iterations to reach similar accuracy.

Teaching AI to See Anatomy

To see what MetaSeg had learned, the researchers used principal component analysis (PCA) to project the model's internal features into a space they could visualize. The results showed that MetaSeg had naturally learned to distinguish important anatomical regions such as the hippocampus, basal ganglia, and cerebral cortex.
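
A PCA visualization of this kind can be sketched in a few lines: center the per-pixel feature vectors, take the top three principal directions from an SVD, and rescale each projected component to [0, 1] so it can be shown as a red, green, or blue channel. The feature array here is synthetic, with a hypothetical "structure" whose features differ in mean from the rest, standing in for MetaSeg's internal features, which are not reproduced.

```python
import numpy as np


def pca_project(features, k=3):
    """Project (N, D) feature vectors onto their top-k principal components."""
    centered = features - features.mean(axis=0)
    # Rows of vt are principal directions, ordered by decreasing singular value.
    _, s, vt = np.linalg.svd(centered, full_matrices=False)
    return centered @ vt[:k].T, s[:k]


def to_rgb(proj):
    """Min-max scale each component to [0, 1] so three components display as RGB."""
    lo, hi = proj.min(axis=0), proj.max(axis=0)
    return (proj - lo) / np.where(hi > lo, hi - lo, 1.0)


# Synthetic stand-in for per-pixel features of a 32x32 slice with 64 channels.
rng = np.random.default_rng(0)
side, channels = 32, 64
features = rng.normal(0, 0.1, (side * side, channels))
region = (np.arange(side * side) % side) < side // 2  # hypothetical "structure" mask
features[region] += 1.0                               # that region's features differ in mean

proj, s = pca_project(features)
rgb = to_rgb(proj).reshape(side, side, 3)  # image-shaped, ready to display
```

If features encode anatomy, pixels from the same structure cluster along the leading components and show up as coherent color patches; in this toy, the first component alone separates the two synthetic regions.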

Ablation tests, which remove different parts of the model, showed that its performance depended on training reconstruction and segmentation at the same time. A model trained on images alone scored 0.81 on the Dice score, but when both objectives were trained simultaneously, the score rose to 0.93. This joint training lets MetaSeg recognize not only what structures look like but also what they mean in the broader context of the image.

Challenges and Room to Grow

No model is perfect, and MetaSeg has some weaknesses. It showed some sensitivity to spatial misalignment: rotating or shifting a scan could reduce performance by a few percentage points. The researchers believe more data augmentation during training could address this limitation. Still, the system held up in most tests, handling even low-resolution scans with high accuracy.

Because INRs represent images continuously, MetaSeg can interpolate seamlessly between pixels or voxels. This means it can turn low-resolution data into high-resolution segmentation maps, which could be especially useful in clinical settings where scan quality varies.
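
The resolution-independence argument can be illustrated with a simplified stand-in for an INR: a single linear layer over fixed Fourier features of (x, y), fit by least squares to a coarse 8x8 grid and then queried on a 64x64 grid it never saw. The frequency set `FREQS`, the smooth ground-truth `field`, and the 0.5 threshold that turns values into a mask are all hypothetical. MetaSeg's own networks are trained multi-layer models, but the principle that a continuous function can be evaluated at any coordinate is the same.

```python
import numpy as np

# Hypothetical integer frequency pairs (kx, ky); half-plane so no pair mirrors another.
FREQS = [(kx, ky) for kx in range(3) for ky in range(-2, 3) if kx > 0 or ky >= 0]


def encode(coords):
    """Fourier features of (N, 2) coordinates in [0, 1): sin and cos of 2*pi*(kx*x + ky*y)."""
    k = np.array(FREQS, dtype=float)   # (F, 2)
    angles = 2 * np.pi * coords @ k.T  # (N, F)
    return np.concatenate([np.sin(angles), np.cos(angles)], axis=1)


def grid(side):
    """Coordinates of a side x side pixel grid, flattened to (side*side, 2)."""
    g = np.arange(side) / side
    xx, yy = np.meshgrid(g, g, indexing="ij")
    return np.stack([xx.ravel(), yy.ravel()], axis=1)


def field(coords):
    """Hypothetical smooth ground truth, e.g. a tissue probability map."""
    x, y = coords[:, 0], coords[:, 1]
    return 0.5 + 0.4 * np.sin(2 * np.pi * x) * np.cos(2 * np.pi * y)


# Fit the continuous representation to a coarse 8x8 "scan"...
low = grid(8)
weights, *_ = np.linalg.lstsq(encode(low), field(low), rcond=None)

# ...then query it on a 64x64 grid that was never observed.
high = grid(64)
upsampled = encode(high) @ weights
mask = (upsampled > 0.5).reshape(64, 64)  # toy high-resolution segmentation
```

Because the toy field contains only frequencies the encoding can represent, and the 8x8 grid samples them without aliasing, the upsampled values match the ground truth to floating-point precision. Real anatomy is not band-limited this way, so real upsampling is an approximation.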

Democratizing Medical AI

Guha Balakrishnan, assistant professor of electrical and computer engineering and a member of Rice's Ken Kennedy Institute, said the new framework offers "a new, scalable way to the field of medical image segmentation that has been cornered for the last decade by U-Nets."

MetaSeg's efficiency makes it easier to deploy where computing power is limited. Small clinics, rural hospitals, or research institutions without access to high-end GPUs could use it to analyze scans efficiently and precisely. Its adaptable design could also find applications beyond medicine, in segmentation tasks for satellite imaging, autonomous vehicles, or industrial inspection.

The team's work is part of Rice University's growing digital health research effort, which includes the Digital Health Initiative and the jointly branded Rice-Houston Methodist Digital Health Institute. Balakrishnan and Veeraraghavan, chair of Rice's department of electrical and computer engineering, hope MetaSeg's success shows that medical AI does not need to be large and expensive to be effective.

A Step Toward Smarter, Leaner AI

In a field where bigger models get most of the headlines, MetaSeg offers a different vision: smaller, leaner systems that deliver class-leading results. Its achievements also challenge the assumption that bigger networks and more data automatically produce better results.

By focusing on how AI learns rather than how much it learns, the Rice team showed that performance and efficiency need not be at odds. MetaSeg's ability to adapt quickly to new scans, without needing their labels, is a significant step toward making medical imaging technology accessible in low-resource environments.

As lead author Kushal Vyas explained to The Brighter Side of News, "In this work, we introduced MetaSeg, an entirely new approach to image segmentation."

Practical Applications of the Work

MetaSeg could transform the way physicians read scans by lowering cost and processing time. Because it works efficiently with modest data and computing resources, small hospitals and research laboratories could gain powerful diagnostic capabilities without expensive infrastructure.

The approach is also promising beyond medicine: its small footprint could suit environmental monitoring, self-driving cars, or factory quality control.

Finally, MetaSeg's combination of INRs and meta-learning points toward AI models that are not only more capable but also more sustainable and inclusive.

The research findings are available online through Springer Nature Link.

Shy Cohen

Science & Technology Writer