New AI-powered armband uses gestures to control robots in real time

New UC San Diego armband uses AI and stretchable sensors to read gestures and control robots, even while you run or ride rough waves.

Edited By: Joseph Shavit

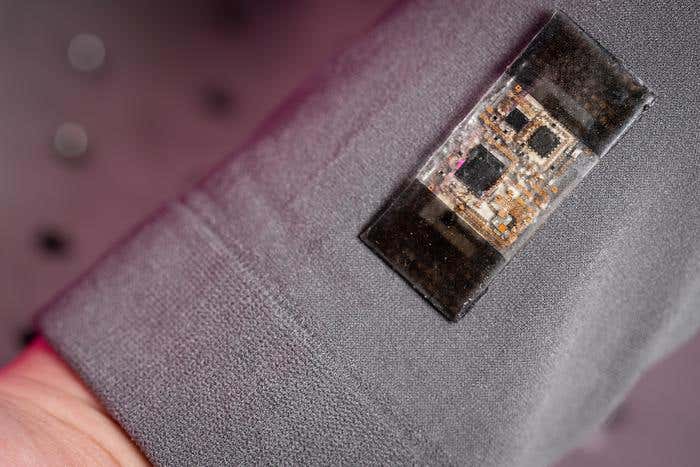

Engineers at UC San Diego have built a soft armband that combines stretchable sensors and deep learning so you can control machines with simple gestures, even while running, vibrating or moving on ocean waves. The system cleans noisy data in real time and turns everyday motion into reliable robot commands. (CREDIT: David Baillot/UC San Diego Jacobs School of Engineering)

A soft armband that lets you steer a robot while you sprint on a treadmill or bob on rough seas sounds like science fiction. Engineers at the University of California San Diego have now built something close to that reality, and it is designed to work with the way you actually move, not just when you stand still in a lab.

Their next-generation wearable system, described in the journal Nature Sensors, uses stretchable electronics and artificial intelligence to read your gestures in real time, even when your whole body is shaking, bouncing or swaying.

Why Gestures Fail Once You Start Moving

Gesture-controlled wearables already exist, but they usually work best in quiet conditions. If you sit at a desk and flex your wrist, the electronics can read that motion and send a clear signal. Once you start running, riding in a vehicle or working near heavy machinery, those signals get buried under motion noise.

Sensors pick up every jolt and vibration, not just the gesture you intend. That noise scrambles the pattern that software expects to see and the system either hesitates or makes mistakes. In the real world, where people rarely hold still, that problem turns many gesture systems into novelties rather than reliable tools.

Study co-first author Xiangjun Chen, a postdoctoral researcher in UC San Diego’s Aiiso Yufeng Li Family Department of Chemical and Nano Engineering, put it simply: wearable gesture sensors work fine when a user is sitting still, but the signals fall apart under excessive motion.

The new system attacks that weakness head on. Instead of asking you to stay calm and steady, it assumes you will not, then cleans up the sensor data with a deep learning framework that was trained to expect chaos.

A Soft Patch Packed with Sensors and AI

The device itself looks modest. It is a soft electronic patch glued onto a cloth armband and wrapped around the upper forearm. Inside that small footprint, engineers stacked several layers: motion sensors, muscle sensors, a Bluetooth microcontroller and a stretchable battery.

The motion sensors, known as inertial measurement units, track how your arm moves in space. The muscle sensors work on the principle of electromyography (EMG) and read the subtle electrical activity produced when you flex or clench. Together, they capture both the motion of your arm and the effort in your muscles, which gives a richer picture of each gesture.

All of this data streams wirelessly to the system’s AI engine. There, a customized deep learning model processes the raw signals, strips away the background noise and decides which gesture you meant to make. That decision is converted into a command and sent in real time to a machine, such as a robotic arm.
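The processing chain described above can be pictured with a minimal Python sketch. It assumes a hypothetical `classify` function standing in for the team's deep learning model, a hypothetical gesture-to-command table (`COMMANDS`), and illustrative values for the window length and confidence threshold; none of these names or numbers come from the study.

```python
from collections import deque
from typing import Callable, Deque, Dict, List, Optional, Sequence, Tuple

# Hypothetical gesture-to-command table; the study's actual gesture set and
# robot command format are not described in this article.
COMMANDS: Dict[str, str] = {
    "fist": "GRIP_CLOSE",
    "open_hand": "GRIP_OPEN",
    "wrist_up": "ARM_UP",
    "wrist_down": "ARM_DOWN",
}

Sample = Sequence[float]  # one time step of fused motion (IMU) + muscle (EMG) channels
Classifier = Callable[[List[Sample]], Tuple[str, float]]  # window -> (label, confidence)


class GestureStream:
    """Turns a stream of sensor samples into robot commands.

    Keeps a sliding window of the most recent samples, hands it to a
    classifier (a stand-in for the trained model), and emits a command
    only when the prediction is confident enough.
    """

    def __init__(self, classify: Classifier, window_size: int = 50,
                 min_confidence: float = 0.8) -> None:
        self.classify = classify
        self.buffer: Deque[Sample] = deque(maxlen=window_size)
        self.min_confidence = min_confidence

    def push(self, sample: Sample) -> Optional[str]:
        """Add one sample; return a command string, or None if nothing to send."""
        self.buffer.append(sample)
        if len(self.buffer) < self.buffer.maxlen:
            return None  # not enough history to classify yet
        label, confidence = self.classify(list(self.buffer))
        if confidence < self.min_confidence:
            return None  # uncertain: better to send nothing than a wrong command
        return COMMANDS.get(label)  # None for labels with no mapped command


# Toy classifier for demonstration only: it calls any window whose first
# channel averages above 0.5 a confident "fist".
def toy_classifier(window: List[Sample]) -> Tuple[str, float]:
    mean = sum(s[0] for s in window) / len(window)
    return ("fist", 0.95) if mean > 0.5 else ("open_hand", 0.4)


stream = GestureStream(toy_classifier, window_size=3)
for sample in ([1.0, 0.0], [1.0, 0.1], [0.9, 0.0]):
    command = stream.push(sample)
print(command)  # GRIP_CLOSE
```

Because the window slides forward one sample at a time, a real controller would also need some form of debouncing so that a single held gesture is not sent as many repeated commands; that detail is left out of this sketch.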

The researchers did not train the model only on clean, laboratory gestures. They built a composite dataset that blended real gestures with layers of disturbance. Running, shaking, posture changes and even the swirling motion of ocean waves were all included so the network could learn to tell signal from noise in many situations.
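A composite dataset of this kind resembles data augmentation: clean gesture recordings are overlaid with synthetic disturbances while keeping their original labels. The sketch below is a simplified, single-channel illustration under that assumption. The disturbance shapes, their frequencies and amplitudes, and helper names such as `build_composite_dataset` are hypothetical and not taken from the paper.

```python
import math
import random
from typing import Callable, List, Sequence, Tuple

Signal = List[float]

# Disturbance models. Frequencies and amplitudes are illustrative guesses,
# not parameters reported by the study.
def arm_swing(n: int, rate_hz: float, rng: random.Random) -> Signal:
    """Periodic swing of roughly 1-2 Hz, loosely like running."""
    f, amp = rng.uniform(1.0, 2.0), rng.uniform(0.2, 1.0)
    return [amp * math.sin(2 * math.pi * f * i / rate_hz) for i in range(n)]

def vibration(n: int, rate_hz: float, rng: random.Random) -> Signal:
    """Broadband random jitter standing in for machine vibration."""
    sigma = rng.uniform(0.05, 0.3)
    return [rng.gauss(0.0, sigma) for _ in range(n)]

def wave_sway(n: int, rate_hz: float, rng: random.Random) -> Signal:
    """Slow sway of about 0.1-0.3 Hz, loosely like a deck on ocean waves."""
    f, amp = rng.uniform(0.1, 0.3), rng.uniform(0.5, 2.0)
    phase = rng.uniform(0.0, 2 * math.pi)
    return [amp * math.sin(2 * math.pi * f * i / rate_hz + phase) for i in range(n)]

DISTURBANCES: List[Callable[[int, float, random.Random], Signal]] = [
    arm_swing, vibration, wave_sway,
]

def add_disturbances(clean: Sequence[float], rng: random.Random,
                     rate_hz: float = 100.0) -> Signal:
    """Overlay a random combination of one or more disturbances on a clean recording."""
    chosen = rng.sample(DISTURBANCES, k=rng.randint(1, len(DISTURBANCES)))
    noisy = list(clean)
    for make_noise in chosen:
        noise = make_noise(len(noisy), rate_hz, rng)
        noisy = [s + d for s, d in zip(noisy, noise)]
    return noisy

def build_composite_dataset(clean: Sequence[Sequence[float]], labels: Sequence[str],
                            copies: int = 5, seed: int = 0) -> List[Tuple[Signal, str]]:
    """Keep each clean gesture and add `copies` disturbed versions with the same label."""
    rng = random.Random(seed)
    dataset: List[Tuple[Signal, str]] = []
    for signal, label in zip(clean, labels):
        dataset.append((list(signal), label))
        for _ in range(copies):
            dataset.append((add_disturbances(signal, rng), label))
    return dataset
```

Stacking several disturbances on the same recording mirrors the article's point that the network should see combined conditions, such as running plus vibration, and not only one kind of noise at a time.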

Making Gesture Control Work In Real Life

Once the system was trained, the team asked volunteers to wear the armband and control a robotic arm while dealing with serious motion. In one test, subjects ran on a treadmill while the robot carried out tasks based on their arm movements. In another, high-frequency vibration shook the wearer while the system still had to track their gestures.

The team also combined disturbances, such as running plus vibration, to push the system harder. Across these conditions, the armband recognized gestures accurately and sent low-latency commands to the robotic arm. The robot responded smoothly, even though the human operator was in constant motion.

To see whether the device could cope with realistic ocean motion, the researchers moved testing to the Scripps Ocean-Atmosphere Research Simulator at UC San Diego’s Scripps Institution of Oceanography. That facility can recreate both lab-generated and real sea states. Inside this large simulator, waves rocked the test platform while the wearable tried to read gestures. The system continued to work, holding its performance under conditions far more violent than a calm office.

According to the authors, this is the first wearable human-machine interface that shows reliable operation across such a wide range of motion disturbances. It does not ask the world to slow down first.

Who Could Benefit From Motion-Tolerant Wearables

Because the system can handle messy motion, it opens doors for a long list of users. Someone going through rehabilitation after an injury might use natural arm gestures to steer a robotic aid or exoskeleton, even if they cannot sit perfectly still or perform small, precise finger movements.

People with limited mobility could gain easier control of assistive robots in daily life. Industrial workers and first responders, who often operate in loud, unstable, hazardous spaces, might use gesture control to direct tools or robotic partners while keeping their hands free.

The original idea grew out of military needs: the project was first inspired by the challenge of helping divers control underwater robots in turbulent water. As the work progressed, it became clear that motion noise is not a problem limited to the ocean. It affects almost every serious attempt to make gesture-based wearables useful outside controlled labs.

In consumer tech, a motion-tolerant interface could make gesture-based control finally feel dependable. Whether you are on a train, moving through a crowd or working out, your smartwatch, augmented reality glasses or gaming setup could still read your commands instead of losing track when you move.

A New Blueprint For Smarter Wearable Sensors

The hardware itself is designed for daily use. The battery and circuits stretch with your arm, then return to shape without damage. Tests showed stable wireless signal strength and voltage even after repeated stretching cycles.

On the software side, the deep learning model does not stay fixed. The system can adapt to a new user with only a small amount of personal training data. With just a couple of sample gestures, it tunes its internal parameters so it better matches your unique movement patterns. That transfer learning step makes the system more practical, since it would not need hours of custom training every time a new person puts it on.
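The study adapts its deep network to each new user; the article does not say exactly which parameters are tuned. The minimal Python sketch below only illustrates the general transfer learning idea with a toy: assume a pretrained feature extractor stays frozen (not shown) and re-fit just the final softmax layer on a couple of labelled gestures from the new user. The `LinearHead` name, the learning rate and the epoch count are assumptions, not details from the paper.

```python
import math
from typing import List, Sequence

Vector = List[float]


def softmax(z: Sequence[float]) -> Vector:
    m = max(z)  # subtract the max for numerical stability
    e = [math.exp(v - m) for v in z]
    s = sum(e)
    return [v / s for v in e]


class LinearHead:
    """Final classification layer sitting on top of a frozen feature extractor."""

    def __init__(self, weights: List[Vector], bias: Vector) -> None:
        self.w = [row[:] for row in weights]  # one weight row per gesture class (copied)
        self.b = bias[:]

    def probs(self, x: Sequence[float]) -> Vector:
        logits = [sum(wi * xi for wi, xi in zip(row, x)) + bj
                  for row, bj in zip(self.w, self.b)]
        return softmax(logits)

    def predict(self, x: Sequence[float]) -> int:
        p = self.probs(x)
        return max(range(len(p)), key=p.__getitem__)

    def fine_tune(self, xs: Sequence[Sequence[float]], ys: Sequence[int],
                  lr: float = 0.1, epochs: int = 50) -> None:
        """Adapt only this head with a few labelled user samples (cross-entropy SGD)."""
        for _ in range(epochs):
            for x, y in zip(xs, ys):
                p = self.probs(x)  # computed once, before any weight changes
                for k in range(len(self.w)):
                    g = p[k] - (1.0 if k == y else 0.0)  # dLoss/dlogit_k
                    self.w[k] = [wk - lr * g * xi for wk, xi in zip(self.w[k], x)]
                    self.b[k] -= lr * g


# Toy example: the "population" head misreads this user's class-0 gesture.
head = LinearHead([[1.0, 0.0], [0.0, 1.0]], [0.0, 0.0])
user_x0, user_x1 = [0.2, 0.5], [-1.0, 1.0]
before = head.predict(user_x0)
head.fine_tune([user_x0, user_x1], [0, 1])
after = head.predict(user_x0)
```

Updating only the last layer keeps the number of adjusted parameters small, which is one common reason a handful of calibration samples can be enough.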

Chen sees the work as more than a single gadget. “This work establishes a new method for noise tolerance in wearable sensors,” he said. “It paves the way for next generation wearable systems that are not only stretchable and wireless, but also capable of learning from complex environments and individual users.”

The project, supported by the Defense Advanced Research Projects Agency, brought together the labs of Sheng Xu and Joseph Wang at UC San Diego. Their combined expertise in stretchable electronics, chemical sensing and robotics helped turn a fragile lab concept into a robust, wearable interface.

Practical Implications Of The Research

In the long term, this motion-tolerant armband could reshape how you interact with machines. Reliable gesture control in real environments would make it easier to direct robots, drones or smart tools without reaching for buttons, joysticks or touchscreens. That freedom could help workers stay focused on tasks, patients stay engaged in rehabilitation and people with disabilities gain more natural control over assistive devices.

For researchers and engineers, the study offers a clear blueprint. It shows that you can combine stretchable hardware with AI trained on messy, real-world data to overcome motion noise, rather than ignoring it. Future projects in health monitoring, sports tracking or virtual reality can build on this approach, using deep learning to clean up unstable signals instead of avoiding challenging conditions.

The idea of personalization through quick transfer learning also matters. It suggests that future wearables could arrive with a strong base model, then quickly adapt to your own movement style with only a few simple calibration gestures. That could lower barriers to adoption and make advanced interfaces feel more personal and trustworthy.

Overall, the work points toward a future in which gesture-based control is not a fragile lab demo, but a dependable tool that fits into your daily life, even when that life is fast, noisy and full of motion.

Research findings are available online in the journal Nature Sensors.

Shy Cohen

Writer