New AI systems are teaching machines to verifiably function safely

AI learns to mathematically prove system stability, ensuring autonomous machines like cars and grids operate safely.

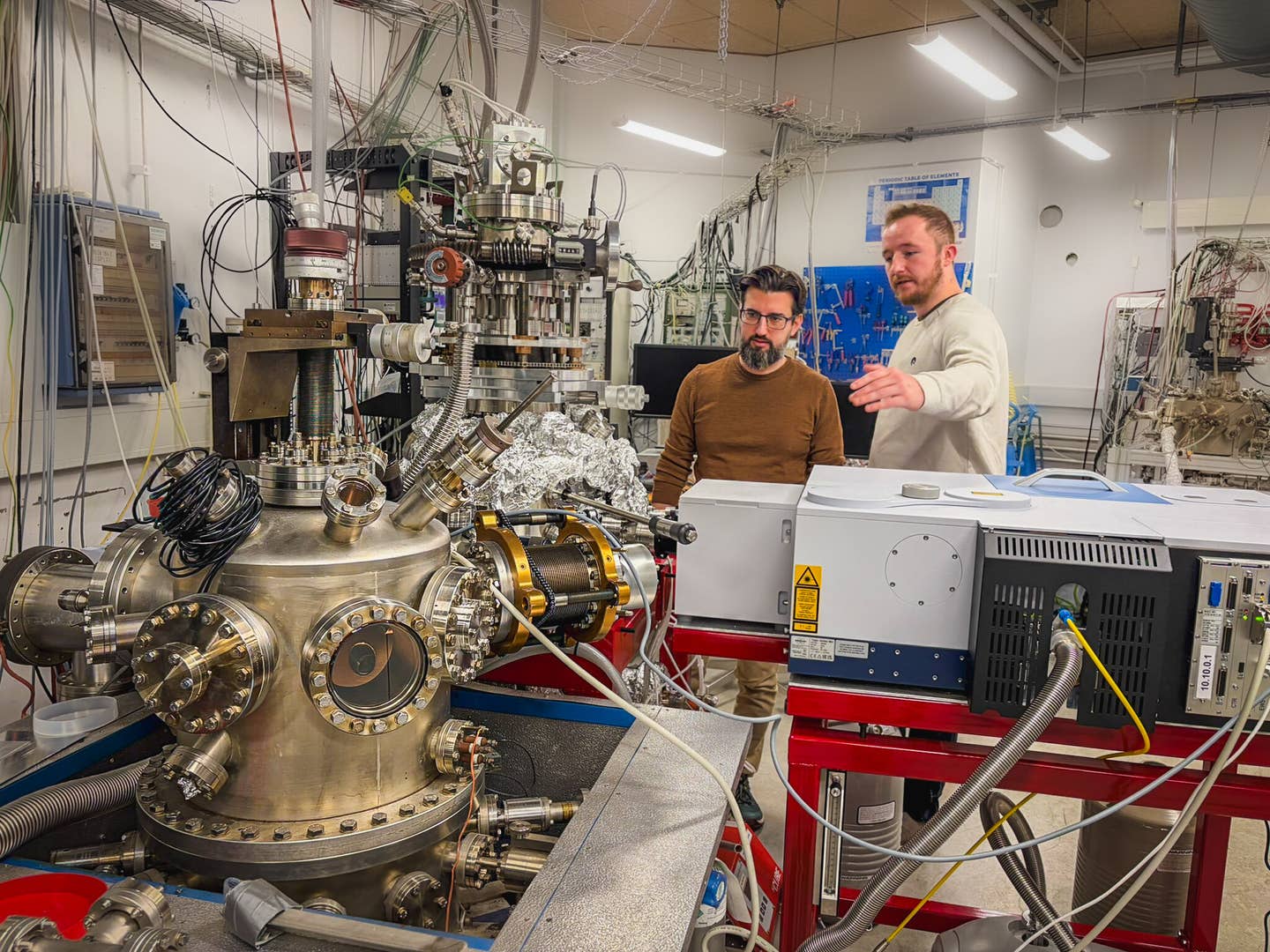

New study shows how AI can learn mathematical proofs of safety, combining deep learning with formal verification to guarantee that autonomous systems stay stable and under control. (CREDIT: University of Waterloo)

From industrial robots to self-driving cars, engineers face a common problem: keeping machines steady and predictable. When systems move, they can spiral out of control unless designed with mathematical proof of stability. That proof comes from what’s called a Lyapunov function, a sort of “energy measure” that shows whether a system will settle safely into equilibrium. The challenge? Finding one is extremely hard, especially for systems influenced by randomness or nonlinear forces.

A research team led by Dr. Jun Liu, professor of applied mathematics and Canada Research Chair in Hybrid Systems and Control at the University of Waterloo, has found a way to automate that process. By combining physics-informed neural networks—AI systems that learn from the laws of physics—with rigorous mathematical verification, Liu’s group created a framework that can both learn and prove the stability of complex systems.

“Any time you’re dealing with a dynamic system—something that changes over time, such as an autonomous vehicle or a power grid—you can model it mathematically,” Liu said. “Finding a function that guarantees stability, however, is often a notoriously difficult task.”

Turning Math Into a Learnable Problem

The team’s innovation lies in teaching AI how to find these Lyapunov functions. In control theory, stability can be expressed through a type of equation known as a partial differential equation. The researchers reformulated two of these—the Lyapunov and Zubov equations—so a neural network could learn them directly. If the network satisfies the equations, it has essentially discovered a mathematical proof that the system is stable.

The Zubov equation goes a step further, mapping not only whether a system is stable but also showing the full “safe zone” of operation, known as the domain of attraction. This region defines the conditions under which a machine can start up and still return to safety without spinning out of control.

Once the network learns these conditions, it generates a Lyapunov function automatically. The result is both a proof of stability and a visual map of where that stability holds—a critical tool for engineers designing safe autonomous systems.

Checking the AI’s Homework

But learning alone isn’t enough. Neural networks are flexible and powerful, yet they sometimes approximate solutions without truly proving them. To ensure accuracy, Liu’s team added a formal verification step. Using a mathematical reasoning system known as an SMT solver (satisfiability modulo theories), they verified that the neural network’s solution actually satisfied the strict inequalities needed for stability.

This two-step process—learning followed by proof—ensures the model’s reliability. The neural network first proposes a candidate Lyapunov function. Then, the SMT solver checks whether it remains positive everywhere except at equilibrium, and whether its rate of change is always negative as the system evolves. If both hold true, the function is certified as mathematically valid.

The hybrid method blends the strengths of human-designed mathematics with AI’s adaptive learning. It combines a simple, trusted local model for guaranteed stability near equilibrium with a neural network that covers broader, more complex regions where traditional math struggles.

Beyond Traditional Methods

Classical approaches like polynomial-based sum-of-squares techniques can only describe smooth, bowl-shaped energy surfaces. Real systems, however, often behave far less neatly. Their stability regions twist, bend, and fold—shapes that polynomials can’t capture well. Neural networks, trained on the Lyapunov and Zubov equations, can flex to fit those complex boundaries.

In benchmark tests, the AI-driven approach consistently outperformed conventional methods. For example, when tested on the Van der Pol oscillator—a classic model for nonlinear dynamics—the neural network captured a much larger and more accurate region of stability than traditional polynomial models. In power grid simulations, it performed even better, handling equations that standard techniques couldn’t.

Liu’s team extended their method to systems with up to 20 interacting parts by verifying smaller subsystems individually and combining them. That compositional strategy allows the technique to scale efficiently, offering a path to verifying stability in large, interconnected networks like smart grids or fleets of autonomous drones.

Building Trust in AI Systems

The idea of using one form of AI to verify another may seem odd, but Liu said it makes perfect sense. “Neural networks can learn mathematical proofs of safety, while logic-based AI systems verify that those proofs are correct,” he explained. “Both are tasks humans used to perform manually.”

The approach doesn’t aim to replace people. Instead, it automates the heavy computation required to analyze complex systems—tasks that would take humans months or years to complete. “No one is building factories run entirely by AI without oversight,” Liu said. “These systems help take over tedious or computation-intensive work so humans can focus on higher-level decisions.”

The team’s framework has already matched or exceeded existing control methods across multiple case studies. The researchers are now developing an open-source software package and exploring collaborations with industry partners to advance trustworthy AI for real-world engineering systems.

This work aligns with the University of Waterloo’s broader efforts to promote responsible AI through initiatives like the TRuST Scholarly Network and national programs for transparent technology development.

Practical Implications of the Research

The implications of this research reach far beyond theory. By merging deep learning with formal mathematics, engineers can design AI systems that are not only smart but provably safe. This could change how we build and certify everything from drones and self-driving vehicles to power grids and robotic surgery systems.

In the future, AI might not only control physical systems but also provide its own proof of safety before deployment. The combination of physics-based training and logical verification could lead to AI controllers that regulators, engineers, and the public can fully trust.

This approach may usher in a new era of dependable machine learning—one where systems that learn can also guarantee their reliability.

Research findings are available online in the journal Automatica.

Related Stories

- AI meets morality: Scientists are rethinking technology in 'smart cities'

- AI revolution: Researchers teach everyday objects to sense, think, and move

- Cutting-edge AI model aims to protect personal privacy and safety

Like these kind of feel good stories? Get The Brighter Side of News' newsletter.

Shy Cohen

Science & Technology Writer