New brain-computer interface turns silent thoughts into words

Scientists have taken a major step toward helping people “speak” without moving a muscle—by decoding the silent voice inside the mind.

Stanford researchers have successfully decoded imagined speech from brain activity, marking a leap forward in communication technology for people with paralysis. (CREDIT: Emory BrainGate Team)

In a study published in the journal Cell, researchers at Stanford University reported that they could interpret inner speech, the words you think but never say out loud, with accuracy rates of up to 74%. Their approach could open new possibilities for people who have lost the ability to talk due to conditions like ALS or brainstem strokes.

Lead author Erin Kunz says this is the first time scientists have been able to characterize what brain activity looks like when a person simply thinks about speaking. “For people with severe speech and motor impairments, BCIs capable of decoding inner speech could help them communicate much more easily and more naturally,” she explains.

Moving Beyond Spoken Effort

Brain-computer interfaces, or BCIs, have already shown promise in helping people with paralysis operate devices like robotic arms or computer cursors. In recent years, BCIs have been trained to decode attempted speech, allowing users to type out words by engaging the brain’s speech-control regions, even if no actual sounds come out. This method is much faster than older assistive technologies, such as systems that track eye movements, but it still requires users to physically attempt to form words.

The Stanford team wondered: what if you could skip that step entirely?

“If you just have to think about speech instead of actually trying to speak, it’s potentially easier and faster for people,” says co-first author Benyamin Meschede-Krasa.

Unlocking the Language of Thought

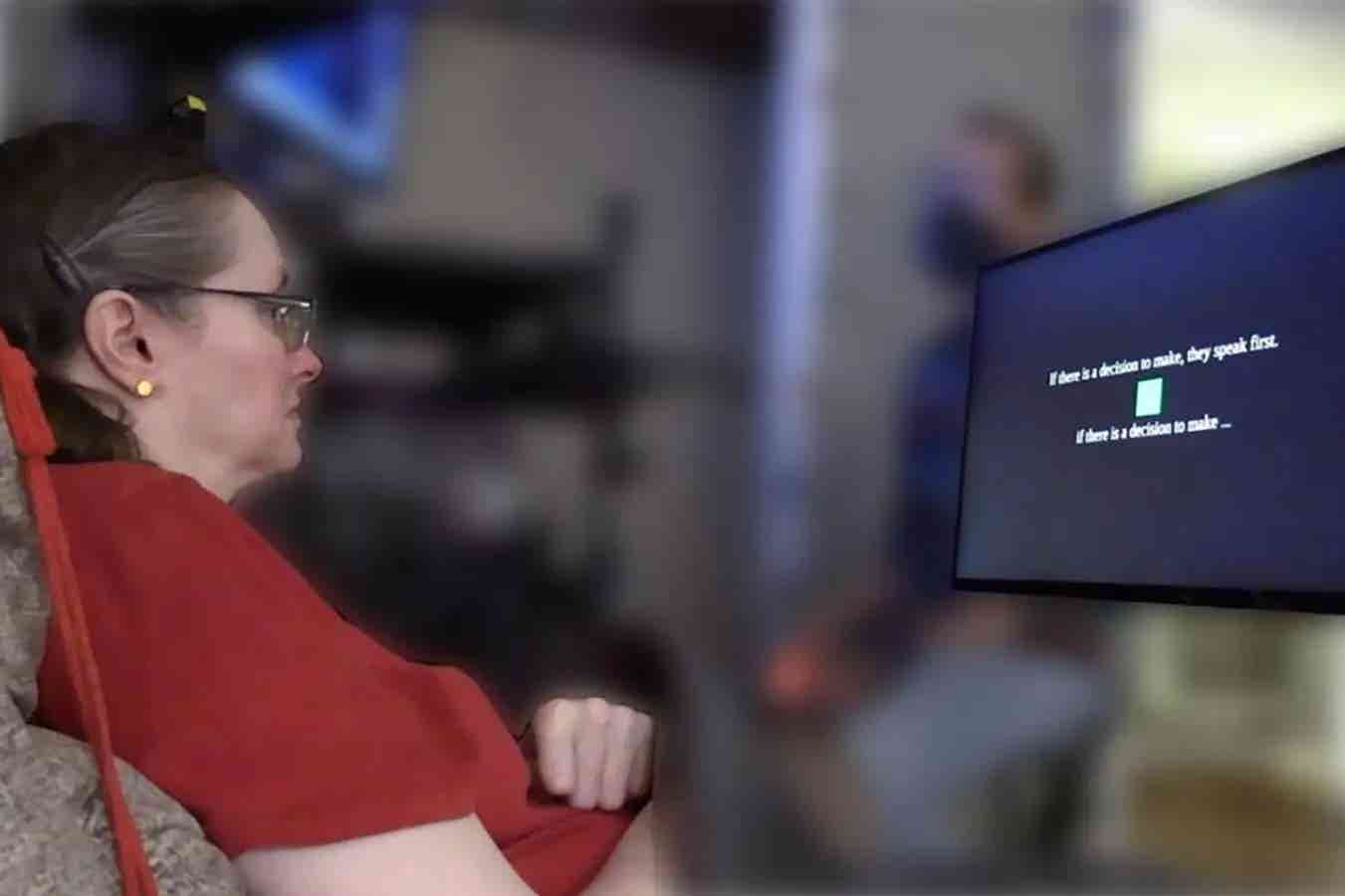

To test the idea, researchers recorded brain activity from four volunteers with severe paralysis, using microelectrodes implanted in the motor cortex, the part of the brain that controls voluntary movement, including the muscle movements that produce speech. Participants were asked either to imagine saying certain words or to attempt to speak them.

They discovered that imagined and attempted speech activate many of the same brain regions and produce similar patterns of neural activity. The main difference? Inner speech signals are weaker, but still distinct enough for AI models to recognize.

From this data, the team trained a computer system to decode silently imagined sentences in real time. Impressively, it could pick out words from a vocabulary of up to 125,000 words with as much as 74% accuracy. In another test, participants silently counting pink circles on a screen generated brain patterns the system could read, even though they had not been told to use inner speech for that task.

Privacy and Passwords for the Mind

The findings raise an important point: if technology can decode unspoken thoughts, could it accidentally capture things you never intended to share?

To address this, the researchers built in a “password” safeguard. The system would only begin translating inner speech when users silently said a chosen keyword—like “chitty chitty bang bang.” In testing, the password system worked with over 98% accuracy.

Senior author Frank Willett says this kind of control is vital. BCIs can be trained to decode inner speech intentionally, but they can also be tuned to ignore it entirely when privacy is needed. “The future of BCIs is bright,” Willett adds. “This work gives real hope that speech BCIs can one day restore communication that is as fluent, natural, and comfortable as conversational speech.”

A Glimpse Into the Future of Communication

For now, reliably decoding free-form inner speech remains beyond the reach of BCIs. But with more advanced sensors and smarter algorithms, scientists believe that day may come. For people with paralysis, the potential is enormous: a conversation without vocal cords, muscles, or even the effort of trying to move.

As BCIs progress, they could not only restore voices but also change how we think about communication itself, blurring the lines between thought and speech in ways that once belonged to science fiction.

Past Studies and Findings

Earlier brain-computer interface research has shown that people with tetraplegia can use neural signals to control robotic arms, operate computer cursors, and even restore rapid communication through handwriting decoding. Recent advances have demonstrated speech neuroprostheses that allow people with ALS to hold open-ended conversations by decoding attempted speech.

Studies have also revealed that inner speech engages many of the same brain regions as speaking aloud, supporting its role in memory, reasoning, and reading comprehension. Neuroprosthetic experiments have decoded inner speech using electrocorticography, but findings have varied on which brain areas contribute most. Most recently, researchers showed that signals from the supramarginal gyrus can represent inner, spoken, and heard speech in shared ways.

Practical Implications of the Research

If refined, this technology could give people with severe speech impairments a faster, less physically demanding way to communicate. For someone who tires easily or cannot move their mouth muscles at all, speaking with only their mind could be life-changing.

The password-protection feature also sets an important precedent for safeguarding mental privacy as BCIs advance. By giving users control over when decoding begins, researchers are addressing one of the biggest ethical concerns in the field.

In the future, these breakthroughs could lead to wearable or minimally invasive devices that allow seamless communication anywhere—turning silent thoughts into spoken words in real time, without ever moving your lips.

Note: The article above was provided by The Brighter Side of News.

Like these kind of feel good stories? Get The Brighter Side of News' newsletter.