New soft armband uses AI to read gestures while you run

New UC San Diego wearable uses AI to read gestures through heavy motion, letting you control robots while running or on waves.

Edited By: Joseph Shavit


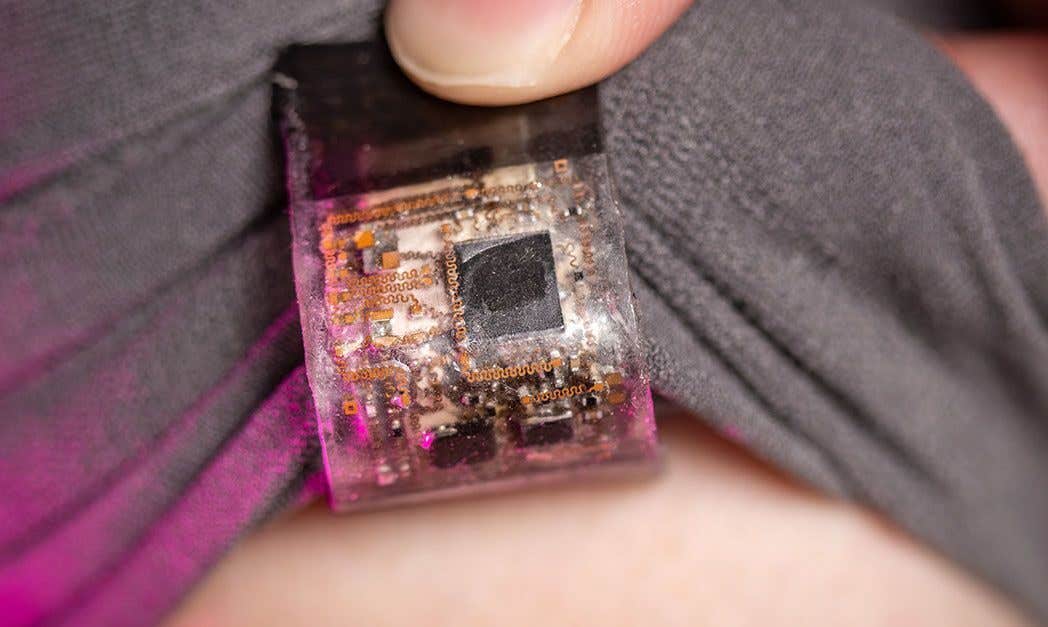

Engineers at UC San Diego have created a soft, AI-powered armband that can read your arm gestures even during intense motion and use them to control machines in real time, a step toward human-machine interfaces that work in the messy conditions of everyday life. (CREDIT: David Baillot/UC San Diego Jacobs School of Engineering)

A soft patch on the arm could soon let you steer robots with simple hand movements, even while your whole body is in motion. That is the promise of a new wearable system from engineers at the University of California San Diego, who set out to fix a problem that has held gesture control back for years.

Gesture-based wearables usually work only in calm lab settings. The moment you start jogging, ride in a car over bumps or move through choppy water, the signal breaks apart. Real-life motion drowns out the gestures your device is trying to read.

This new system uses stretchy electronics and artificial intelligence to filter that chaos in real time. It lets everyday gestures reliably control machines in situations that used to be “off limits” for wearables.

Why Gesture Control Often Fails in Real Life

Most existing gesture devices depend on clean signals from motion or muscle sensors. That works when you are sitting still. Once you start moving, the sensors pick up extra vibrations and jolts, and the device has a hard time telling a deliberate gesture from background noise.

“Wearable technologies with gesture sensors work fine when a user is sitting still, but the signals start to fall apart under excessive motion noise,” says Xiangjun Chen, a postdoctoral researcher in the Aiiso Yufeng Li Family Department of Chemical and Nano Engineering at UC San Diego and co-first author of the study.

That noise problem has limited where gesture control can be useful. It has also made many devices feel frustrating or unreliable outside the lab. Chen and colleagues wanted a system that works with the way people actually move in daily life.

A Soft Patch Packed With Electronics

The team built a human-machine interface around a soft electronic patch. The patch is glued onto a cloth armband that wraps around the upper arm, so it stretches and moves with your muscles.

Inside the patch, several pieces work together. Motion sensors track how the arm moves. Muscle sensors pick up electrical signals from your muscles when you flex or make a gesture. A tiny Bluetooth microcontroller gathers those signals and sends them to a computer. A stretchable battery powers the whole system while bending and flexing along with the patch.
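The article does not give sampling rates, channel counts or how the computer groups the streamed sensor data before the AI model sees it. A common approach for models that read signals over time is to buffer the incoming stream into overlapping, fixed-length windows. The sketch below assumes that approach; `SlidingWindow`, its parameters and the channel layout are hypothetical and are not taken from the study.

```python
from collections import deque

import numpy as np


class SlidingWindow:
    """Buffer streamed multichannel samples into fixed-length, overlapping windows.

    Hypothetical layout: each incoming sample is one row of channel readings,
    for example motion-sensor axes followed by muscle-sensor channels.
    """

    def __init__(self, n_channels: int, window: int, hop: int):
        if hop <= 0 or hop > window:
            raise ValueError("hop must be in 1..window")
        self.n_channels = n_channels
        self.window = window
        self.hop = hop
        self._buf = deque(maxlen=window)  # keeps only the most recent `window` samples
        self._since_last = 0  # samples received since the last emitted window

    def push(self, sample):
        """Add one sample; return a (window, n_channels) array when a new window is ready."""
        sample = np.asarray(sample, dtype=float)
        if sample.shape != (self.n_channels,):
            raise ValueError(f"expected {self.n_channels} channels, got {sample.shape}")
        self._buf.append(sample)
        self._since_last += 1
        # Emit once the buffer is full and `hop` new samples have arrived.
        if len(self._buf) == self.window and self._since_last >= self.hop:
            self._since_last = 0
            return np.stack(list(self._buf))
        return None
```

For example, with `window=4` and `hop=2`, ten pushed samples yield four windows starting at samples 0, 2, 4 and 6. The overlap lets the model re-examine each stretch of signal more than once, at the cost of extra computation.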

"What makes this design different is not just the hardware. We paired the patch with a custom artificial intelligence model that acts like a smart filter. It learns to separate the gesture signal you care about from all the shaking and jostling that comes with movement," Chen shared with The Brighter Side of News.

Teaching the System to Ignore Chaos

To train that AI, the researchers created a large composite dataset. Volunteers wore the patch while they made specific gestures under many kinds of disturbance. They ran on a treadmill. They were exposed to strong vibrations. They moved through posture changes. They even faced motions like those generated by ocean waves.
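The article says only that gestures were recorded under each disturbance to form a composite dataset. One widely used way to enlarge such a dataset is to add separately recorded motion noise to gesture recordings at a range of signal-to-noise ratios while keeping each gesture's label unchanged. The sketch below shows that technique as an assumption, not as the team's documented procedure; `mix_at_snr`, `build_composite` and the SNR range are hypothetical.

```python
import numpy as np


def mix_at_snr(gesture: np.ndarray, noise: np.ndarray, snr_db: float) -> np.ndarray:
    """Add a noise recording to a gesture recording at a chosen signal-to-noise ratio.

    Both arrays have shape (samples, channels). The noise is rescaled so that
    10 * log10(P_gesture / P_noise) equals snr_db, where P is mean power.
    """
    if gesture.shape != noise.shape:
        raise ValueError("gesture and noise must have the same shape")
    p_signal = np.mean(gesture ** 2)
    p_noise = np.mean(noise ** 2)
    if p_noise == 0:
        return gesture.copy()
    scale = np.sqrt(p_signal / (p_noise * 10 ** (snr_db / 10)))
    return gesture + scale * noise


def build_composite(gestures, labels, noise_bank, snr_range=(-5.0, 10.0), copies=3, seed=0):
    """Create several noisy copies of each labelled gesture, each with a random noise clip and SNR."""
    rng = np.random.default_rng(seed)
    xs, ys = [], []
    for x, y in zip(gestures, labels):
        for _ in range(copies):
            noise = noise_bank[rng.integers(len(noise_bank))]
            snr = rng.uniform(*snr_range)
            xs.append(mix_at_snr(x, noise, snr))
            ys.append(y)  # the label is unchanged: the gesture is still the same gesture
    return np.stack(xs), np.array(ys)
```

Negative SNR values mean the noise carries more power than the gesture, which loosely mimics the running and wave conditions the article describes.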

Each recording contained both the intended gesture and layers of motion noise. The team fed these data into a deep learning framework that learns patterns over time. The model gradually learned which parts of the signal belonged to the gesture and which came from random motion.
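The article describes the model only as a deep learning framework that learns patterns over time. As a rough illustration of what that can mean, the sketch below shows the forward pass of a tiny temporal convolutional classifier: a filter slides along the time axis of a sensor window, the resulting features are averaged over the window, and a linear layer scores each gesture class. The weights are random and training is omitted; `TinyTemporalNet`, `conv1d_time` and all sizes are hypothetical, and the network used in the study may be quite different.

```python
import numpy as np


def conv1d_time(x, kernels, bias):
    """Valid 1-D convolution over time.

    x: (T, C_in), kernels: (K, C_in, C_out), bias: (C_out,) -> (T - K + 1, C_out)
    """
    k = kernels.shape[0]
    steps = x.shape[0] - k + 1
    out = np.empty((steps, kernels.shape[2]))
    for t in range(steps):
        # Each output step looks at K consecutive samples across all input channels.
        out[t] = np.einsum("kc,kco->o", x[t:t + k], kernels) + bias
    return out


class TinyTemporalNet:
    """Illustrative conv -> ReLU -> average-over-time -> linear classifier (untrained)."""

    def __init__(self, c_in, hidden, n_classes, kernel=5, seed=0):
        rng = np.random.default_rng(seed)
        self.w1 = rng.normal(0, 0.1, (kernel, c_in, hidden))
        self.b1 = np.zeros(hidden)
        self.w2 = rng.normal(0, 0.1, (hidden, n_classes))
        self.b2 = np.zeros(n_classes)

    def predict_proba(self, window):
        h = np.maximum(conv1d_time(window, self.w1, self.b1), 0.0)  # temporal features
        pooled = h.mean(axis=0)                                     # summarize the window
        logits = pooled @ self.w2 + self.b2
        e = np.exp(logits - logits.max())                           # numerically stable softmax
        return e / e.sum()
```

In a trained version, the filters would be fitted on noisy composite data so that features driven by running or wave motion contribute little to the class scores.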

Once trained, the system could take raw data from the arm, strip away interference and recognize the intended gesture in real time. It then translated that gesture into a command and sent it wirelessly to control a machine.
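The article does not explain how a recognized gesture becomes a machine command. A minimal sketch, assuming a classifier that outputs a label and a confidence for each window: low-confidence windows are treated as rest, a gesture must win a majority of recent windows before it fires, and a held gesture is sent only once. The gesture names, the `COMMANDS` table, `CommandGate`, the threshold and the vote count are all hypothetical.

```python
from collections import Counter, deque

# Hypothetical gesture labels and robot commands, for illustration only.
COMMANDS = {
    "fist": "GRIP",
    "open_hand": "RELEASE",
    "wrist_up": "ARM_UP",
    "wrist_down": "ARM_DOWN",
}


class CommandGate:
    """Turn a stream of per-window predictions into commands."""

    def __init__(self, threshold=0.8, votes=3):
        self.threshold = threshold
        self.history = deque(maxlen=votes)
        self.last_sent = None

    def update(self, label, confidence):
        # Low-confidence windows count as rest (None) so motion noise cannot trigger a command.
        self.history.append(label if confidence >= self.threshold else None)
        winner, count = Counter(self.history).most_common(1)[0]
        if count * 2 <= self.history.maxlen:
            return None  # no clear majority yet
        if winner is None:
            self.last_sent = None  # arm is at rest; allow the next gesture to fire
            return None
        if winner == self.last_sent:
            return None  # gesture is being held; don't repeat the command
        self.last_sent = winner
        return COMMANDS.get(winner)
```

Majority voting adds a few windows of delay, so in a design like this the vote count trades responsiveness against robustness to brief noise spikes.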

“This advancement brings us closer to intuitive and robust human-machine interfaces that can be deployed in daily life,” Chen says.

Testing on Land, in Vehicles and Over Waves

To show that the device works in tough conditions, the team asked volunteers to use it to control a robotic arm. Participants guided the robot while running, while exposed to high-frequency vibrations and under combinations of these disturbances.

The system also faced a harsher test at UC San Diego’s Scripps Institution of Oceanography. There, the team used the Scripps Ocean-Atmosphere Research Simulator to recreate both lab-generated and real sea motion. The patch rode through simulated waves while the wearer used gestures to command a machine.

In all of these settings, the interface delivered accurate control with low delay. The robotic arm responded smoothly to gestures even when the person’s whole body was being shaken. That level of reliability across such different disturbances is what makes this system stand out.

Who This Technology Could Help

The original idea came from a very specific need. The team wanted to help military divers control underwater robots without complex equipment or handheld controllers. Very quickly, they saw that the same obstacle appears almost everywhere in the wearable field. Motion noise hurts performance for workers, patients and consumers alike.

If this technology reaches the clinic, a person in rehabilitation could move a robotic aid with natural arm gestures, instead of tiny finger motions. Someone with limited dexterity could steer an assistive robot without fighting stiff buttons or joysticks.

Industrial workers or first responders could control tools or robots in noisy, high-motion settings while keeping their hands free. Divers and remote operators could guide underwater vehicles in choppy water where other systems fail. For everyday gadgets, this kind of robust gesture reading could finally make wave-to-control interfaces feel dependable rather than gimmicky.

“This work establishes a new method for noise tolerance in wearable sensors,” Chen says. “It paves the way for next generation wearable systems that are not only stretchable and wireless, but also capable of learning from complex environments and individual users.”

Practical Implications of the Research

This study shows that adding machine learning directly into a soft wearable can solve one of the biggest problems in gesture control: motion noise. That insight could influence many future designs in health care, industry and consumer electronics.

For medicine, it suggests a path to intuitive control of prosthetics, exoskeletons and rehab robots that follow your natural gestures even as you walk or exercise. That could give patients more independence and make therapy feel more natural.

In the workplace, noise tolerant gesture interfaces could help people guide robots or machines in loud, unstable or dangerous environments without fumbling for physical controls. That might make tasks safer and more efficient.

For researchers, the work offers a template for training AI on realistic, messy data, instead of ideal lab signals. That shift can help new wearable devices learn from the way people actually move and live, rather than from staged tests.

For society, systems like this hint at a future where you can interact with technology through simple, reliable body language, instead of screens and buttons. That could make digital tools feel more human and accessible, especially for people with limited fine motor skills.

Research findings are available online in the journal Nature Sensors.


Rebecca Shavit

Writer

Based in Los Angeles, Rebecca Shavit is a dedicated science and technology journalist who writes for The Brighter Side of News, an online publication committed to highlighting positive and transformative stories from around the world. Her reporting spans a wide range of topics, from cutting-edge medical breakthroughs to historical discoveries and innovations. With a keen ability to translate complex concepts into engaging and accessible stories, she makes science and innovation relatable to a broad audience.