Researchers build magnetic computer that thinks like a brain

Researchers create magnetic neuromorphic computers that learn like the human brain while using far less energy.

Edited By: Joseph Shavit

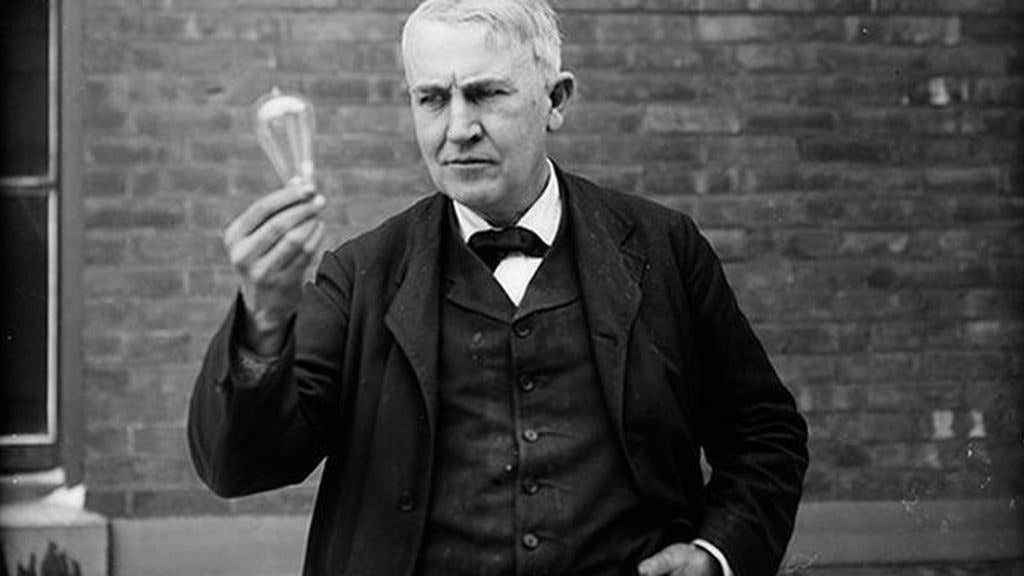

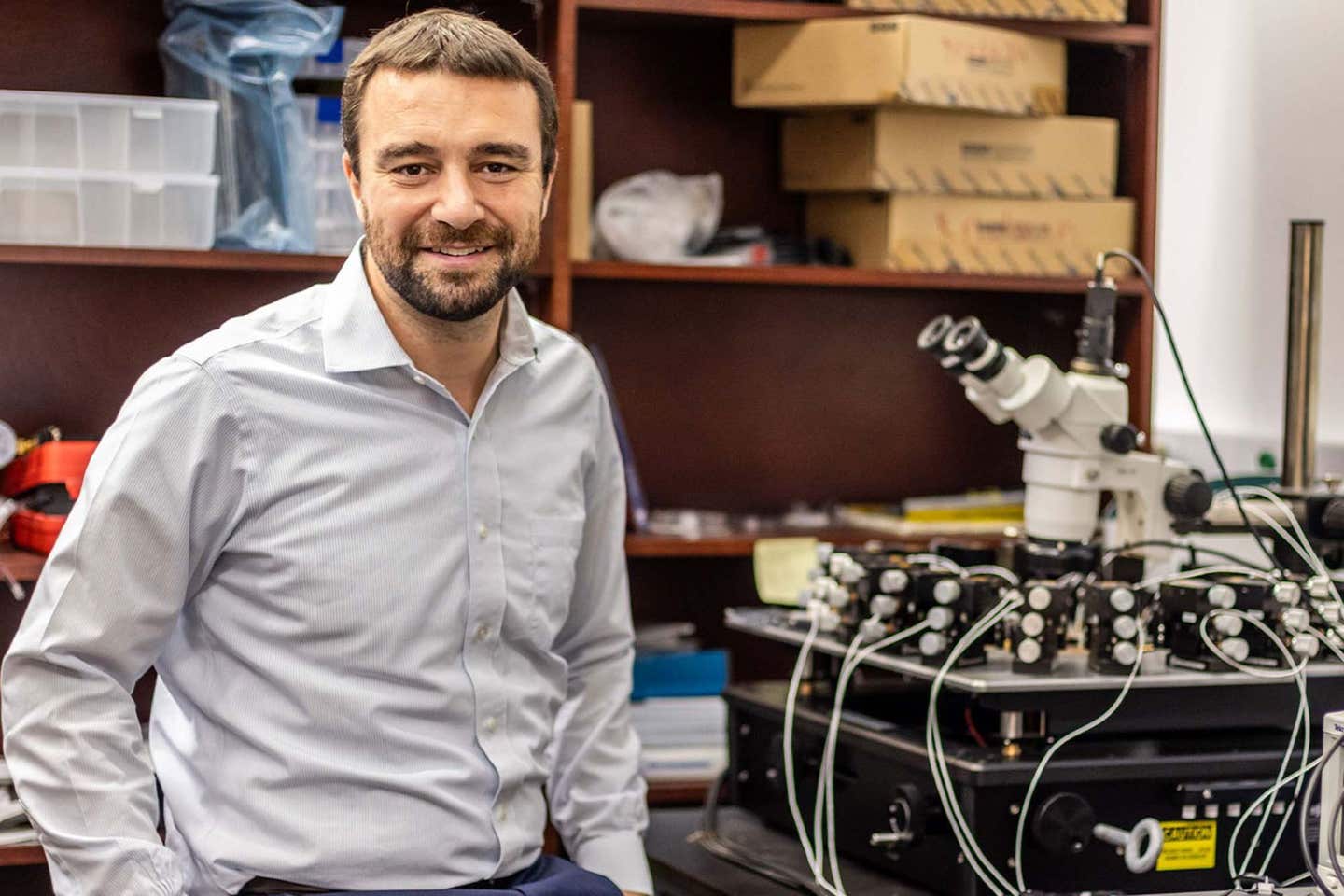

Dr. Joseph S. Friedman and his colleagues created a computer prototype that learns patterns and makes predictions using fewer training computations than conventional artificial intelligence systems. (CREDIT: University of Texas at Dallas)

In the future, a new type of computer may be able to learn much as you do: through experience rather than endless repetition or explicit instruction. Researchers at the University of Texas at Dallas, working with Everspin Technologies and Texas Instruments, have taken a significant step toward that future by teaching magnetic components to behave like the neurons and synapses the brain uses to learn and adapt.

The research builds on neuromorphic computing, an approach that redesigns computers to work more like the human brain. Rather than depending on massive labeled datasets and expensive training computations, neuromorphic systems use brain-inspired hardware that performs memory and processing in the same place, much as your brain links memory and thought so that you can recognize a face or learn a new skill.

Dr. Joseph S. Friedman, associate professor of electrical and computer engineering at UT Dallas, led the work together with Dr. Sanjeev Aggarwal, president and CEO of Everspin Technologies. "Our work provides evidence of a possible new pathway for creating brain-inspired computers that acquire knowledge on their own," Friedman said. "Because neuromorphic computers do not require extensive training computations, they could enable intelligent operations in smart devices while vastly reducing energy loads."

Replacing Fragile Artificial Synapses with Magnetic Ones

Most computers today keep memory separate from processing, so data must constantly shuttle between chips. That traffic costs significant time and energy, especially in AI workloads. Neuromorphic computers close the gap by storing and processing data in the same place, using devices that act like synapses: the connections between neurons that strengthen or weaken as you learn.

Components commonly used in neuromorphic hardware, such as memristors and phase-change memory, have not proven highly reliable. Their resistance states can drift over time, the devices can fail, and they have inherent random error rates. The UT Dallas group found that spin-transfer torque magnetic tunnel junctions (STT-MTJs) offered a more reliable alternative.

An MTJ is a nanoscale sandwich of two magnetic layers separated by a thin insulator. When the two layers are magnetized in the same direction, electrons tunnel through the insulator more easily and the junction's resistance is low; when they point in opposite directions, the resistance is high. The junction therefore has two stable resistance states, much like a binary switch. More interesting still, a voltage pulse switches the junction between these states randomly, with some probability rather than every time. This combination of stable states and controlled randomness strikes a balance between reliability and flexibility that mirrors the brain.
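To make "stable states plus controlled randomness" concrete, here is a toy model of a single stochastic junction. It is a minimal sketch, not a device model: the logistic switching curve, the resistance values, and the parameters `v_half` and `steepness` are all hypothetical choices for illustration. The only behavior it is meant to show is that a junction always sits in one of two resistance states, while whether a given pulse flips it is a matter of chance that grows with pulse strength.

```python
import math
import random

# Hypothetical resistances (ohms); real devices differ.
R_PARALLEL = 2_000.0       # layers aligned: electrons tunnel easily -> low resistance
R_ANTIPARALLEL = 4_000.0   # layers opposed: tunnelling suppressed -> high resistance


class StochasticMTJ:
    """Toy two-state MTJ whose switching under a voltage pulse is probabilistic.

    The logistic switching curve and its parameters are illustrative assumptions,
    not a fitted model of any real junction.
    """

    def __init__(self, parallel=False, v_half=0.5, steepness=10.0, rng=None):
        self.parallel = parallel      # True = low-resistance (parallel) state
        self.v_half = v_half          # assumed pulse amplitude with a 50% switching chance
        self.steepness = steepness    # assumed sharpness of the switching curve
        self.rng = rng if rng is not None else random.Random()

    def switch_probability(self, voltage):
        """Probability that one pulse of this amplitude flips the junction."""
        return 1.0 / (1.0 + math.exp(-self.steepness * (abs(voltage) - self.v_half)))

    def apply_pulse(self, voltage):
        """Positive pulses push toward parallel, negative toward antiparallel (assumed convention)."""
        if voltage == 0:
            return self.parallel
        target = voltage > 0
        if self.parallel != target and self.rng.random() < self.switch_probability(voltage):
            self.parallel = target
        return self.parallel

    @property
    def resistance(self):
        return R_PARALLEL if self.parallel else R_ANTIPARALLEL


# Usage: send one 0.6 V pulse to many fresh (antiparallel) junctions.
rng = random.Random(42)
trials = 10_000
flipped = sum(StochasticMTJ(rng=rng).apply_pulse(0.6) for _ in range(trials))
print(f"switched {flipped / trials:.1%} of junctions")
```

With these made-up parameters roughly three quarters of the junctions switch: each individual outcome is random, but the population behaves predictably, which is the kind of "controlled randomness" the paragraph describes.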

Teaching a Tiny Brain to Recognize Patterns

The researchers tested the idea on a small network of eight MTJs arranged in a 4×2 grid, with each MTJ acting as an artificial synapse that switched between high- and low-conductance states. They trained the network to recognize tiny black-and-white images of four pixels each. To teach the network, the researchers compared the electric currents flowing through each MTJ, and the network learned to recognize patterns, much as neurons learn to recognize familiar shapes.
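The article does not describe the readout circuit or the exact classification task, so the following is only a sketch under stated assumptions. It treats the 4×2 grid as a crossbar: each of the four pixels applies a read voltage to one row, each MTJ passes a current proportional to its conductance (Ohm's law), and each of the two output columns sums those currents; the column carrying more current "wins." The conductance values, read voltage, pixel ordering, and the left-column/right-column weights are all hypothetical. With four black-and-white pixels there are 2^4 = 16 possible images, which the usage loop enumerates.

```python
import itertools
import math

# Hypothetical binary conductances (siemens): parallel = high, antiparallel = low.
G_HIGH = 1 / 2_000
G_LOW = 1 / 4_000
V_READ = 0.1  # assumed read voltage applied for each "on" pixel

# 4 inputs (pixels: top-left, top-right, bottom-left, bottom-right) x 2 outputs.
# Hypothetical weights: output 0 favours the left column, output 1 the right column.
WEIGHTS = [
    [G_HIGH, G_LOW],   # top-left
    [G_LOW, G_HIGH],   # top-right
    [G_HIGH, G_LOW],   # bottom-left
    [G_LOW, G_HIGH],   # bottom-right
]


def column_currents(pixels, weights=WEIGHTS, v_read=V_READ):
    """Ohm's law per junction, summed per column: I_j = sum_i V_i * G_ij."""
    return [sum(v_read * p * row[j] for p, row in zip(pixels, weights))
            for j in range(len(weights[0]))]


def classify(pixels):
    """Return the index of the output with the larger current, or None on a tie."""
    i0, i1 = column_currents(pixels)
    if math.isclose(i0, i1):
        return None
    return 0 if i0 > i1 else 1


# Usage: read out every one of the 16 possible 2x2 black-and-white images.
for image in itertools.product((0, 1), repeat=4):
    print(image, classify(image))
```

With these illustrative weights several images produce equal currents and come out as ties, so this toy readout shows the mechanism only; it does not reproduce the team's trained network or its results.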

The tiny network was able to classify all 16 possible combinations of the images and, more importantly, did so reliably, without the stability problems of analog devices. The experiment demonstrated that MTJ synapses can perform inference tasks, making decisions based on prior learning in a way that resembles biological neurons.

Self-guided Learning

The next stage was even more ambitious: to enable the network to learn independently. The researchers turned to the principle of Hebbian learning, introduced by the psychologist Donald Hebb in 1949, often represented as "cells that fire together, wire together."

When two neurons are active at the same time, the connection between them grows stronger. The UT Dallas team translated this idea into electronics: if an input neuron and an output neuron fired together, the magnetic junction connecting them became more conductive, and if only one of them fired, the junction became less conductive.

In their experiments, the researchers let the MTJ network organize itself without labels or any other external guidance. After only nine learning cycles, each artificial neuron had specialized in recognizing one of two patterns. The randomness of stochastic spin-transfer torque switching effectively let the system explore different options before settling into meaningful connections.
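The Hebbian rule and the self-organizing experiment can be sketched together in a few lines. This is an illustration under several assumptions that the article does not confirm: the two patterns (top row lit versus bottom row lit) are hypothetical, only the single output neuron with the largest current fires (a winner-take-all assumption, with ties broken at random), a synapse whose two neurons both stay silent is left unchanged, and every update succeeds only with probability `p_switch`, standing in for stochastic switching. It is not the team's implementation and does not attempt to reproduce the nine-cycle result.

```python
import math
import random

G_HIGH, G_LOW = 1 / 2_000, 1 / 4_000   # hypothetical conductances (siemens)
PATTERN_A = (1, 1, 0, 0)               # hypothetical pattern: top row lit
PATTERN_B = (0, 0, 1, 1)               # hypothetical pattern: bottom row lit


def hebbian_update(conductive, pre, post, p_switch, rng):
    """Binary stochastic Hebbian rule as described in the article.

    Both neurons fire -> push toward high conductance; exactly one fires ->
    push toward low conductance; neither fires -> no change (an assumption).
    Each push succeeds only with probability p_switch (assumed stochastic switching).
    """
    if pre and post:
        target = True
    elif pre != post:
        target = False
    else:
        return conductive
    if conductive != target and rng.random() < p_switch:
        return target
    return conductive


def winners(weights, x):
    """Output neurons with the largest summed current (more than one on a tie)."""
    currents = [sum(xi * (G_HIGH if row[j] else G_LOW) for xi, row in zip(x, weights))
                for j in range(len(weights[0]))]
    best = max(currents)
    return [j for j, c in enumerate(currents) if math.isclose(c, best)]


def is_specialized(weights):
    """True when each pattern has a single winning neuron and the winners differ."""
    wa, wb = winners(weights, PATTERN_A), winners(weights, PATTERN_B)
    return len(wa) == 1 and len(wb) == 1 and wa != wb


def self_organize(max_cycles=50, p_switch=0.5, seed=0):
    """Present both unlabeled patterns each cycle and apply the rule to all 8 synapses.

    Returns the final weights and the cycle at which specialization appeared (or None).
    """
    rng = random.Random(seed)
    weights = [[rng.random() < 0.5 for _ in range(2)] for _ in range(4)]  # True = parallel
    for cycle in range(1, max_cycles + 1):
        for x in (PATTERN_A, PATTERN_B):
            fired = rng.choice(winners(weights, x))  # winner-take-all (assumed)
            for i in range(4):
                for j in range(2):
                    weights[i][j] = hebbian_update(weights[i][j], x[i] == 1, j == fired,
                                                   p_switch, rng)
        if is_specialized(weights):
            return weights, cycle
    return weights, None


weights, cycle = self_organize(seed=1)
print("specialized after cycle:", cycle)
```

The cycle count varies with the random seed. Because a neuron that loses a pattern has the synapses from that pattern's active pixels weakened, the two neurons are pushed toward different patterns, and once each neuron owns one pattern the rule no longer changes any weights.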

Scaling Up to See the Bigger Picture

Encouraged by these findings, the team simulated a much larger version of the network using MNIST, an established benchmark for handwritten-digit recognition. In the simulation, 784 input neurons corresponded to the pixels of each 28×28 image, and the output layer contained tens of thousands of neurons instead of hundreds.
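The input-layer arithmetic is easy to check: one input neuron per pixel gives 28 × 28 = 784. The short sketch below assumes row-major flattening and a fully connected crossbar with one MTJ per input-output pair, neither of which the article specifies; the 10,000-neuron output layer is a hypothetical figure at the low end of "tens of thousands."

```python
def flatten(image):
    """Row-major flattening of a 2D image into one input value per pixel."""
    return [pixel for row in image for pixel in row]


def synapse_count(n_inputs, n_outputs):
    """A fully connected crossbar (assumed) needs one MTJ per input-output pair."""
    return n_inputs * n_outputs


blank = [[0] * 28 for _ in range(28)]        # placeholder 28x28 image
inputs = flatten(blank)
print(len(inputs))                           # 784 = 28 * 28
print(synapse_count(len(inputs), 10_000))    # 10,000 outputs is a hypothetical example
```

Under these assumptions the layer would need 7,840,000 synapses, which gives a sense of why the team's outlook speaks of chips with millions of magnetic synapses.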

As training progressed, the network began to organize itself. Different neurons tuned to different digits, and some switched their tuning mid-training as they competed for specialization. On evaluation, the MTJ-based system classified handwritten digits with up to 90 percent accuracy, matching the performance of systems built from less reliable analog components.

Its efficiency may be just as exciting. Because each MTJ both stores data and performs computation in place, the system wastes far less energy moving data from chip to chip. The researchers estimated that such a processor could deliver over 600 trillion operations per second per watt, more than six times the efficiency of existing memristor systems and thousands of times that of standard graphics processor systems.
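Since one watt is one joule per second, an efficiency quoted in trillions of operations per second per watt (TOPS/W) converts directly into energy per operation. The back-of-envelope arithmetic below uses only the article's figures and is not the researchers' own calculation; the 100 TOPS/W comparison point is an inference from "more than six times," meaning existing memristor systems would sit somewhere below that value.

```python
def joules_per_op(tops_per_watt):
    """Energy per operation implied by an efficiency quoted in TOPS/W.

    1 W = 1 J/s, so X tera-operations per second per watt = X * 1e12 operations per joule.
    """
    return 1.0 / (tops_per_watt * 1e12)


mtj_fj = joules_per_op(600) * 1e15       # femtojoules per operation
print(f"{mtj_fj:.2f} fJ per operation at 600 TOPS/W")

# "More than six times" the memristor figure implies memristors below 600 / 6 = 100 TOPS/W.
memristor_fj = joules_per_op(600 / 6) * 1e15
print(f"{memristor_fj:.1f} fJ per operation at 100 TOPS/W")
```

At 600 TOPS/W each operation costs about 1.67 femtojoules, versus 10 femtojoules at 100 TOPS/W.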

A Step Toward Machines with Brain-Like Performance

For Friedman and his team, the implications extend well beyond the laboratory. Because MTJ arrays can be fabricated with standard chip-manufacturing processes, future processors could integrate millions of magnetic synapses alongside conventional circuitry. Such chips could let autonomous robots, medical monitoring devices, and other small edge devices learn from ongoing experience without relying on remote servers or large data centers.

By pairing binary precision with the elegant uncertainty of magnetic switching, the team has produced a hardware model of how biological brains balance structure and flexibility. Their research, published in Communications Engineering, marks a major milestone in a decades-long effort to build machines that genuinely learn rather than simply follow instructions.

Implications of the Research for the Real World

This discovery points toward a new generation of less energy-intensive computers that could make artificial intelligence more sustainable and more personal. Because they learn from local experience, devices built on MTJ-based neuromorphic processors would depend less on huge cloud-based infrastructure and could drastically reduce the power needed for AI training.

In practical terms, it could bring smarter wearables that adapt to their users, medical sensors that detect health changes immediately, and autonomous systems that make decisions independently while consuming less power.

It also opens the door to low-cost, on-device AI that can learn and operate without constant connectivity, bringing intelligent technology closer to the way human thinking evolves and responds to the world.

Research findings are available online in the journal Communications Engineering.

Shy Cohen

Writer