Does AI have a gender? Your answer is surprisingly important

Researchers asked how people behave when an AI partner is given a gender and whether gender expectations spill over into interactions with machines.

Edited By: Joseph Shavit

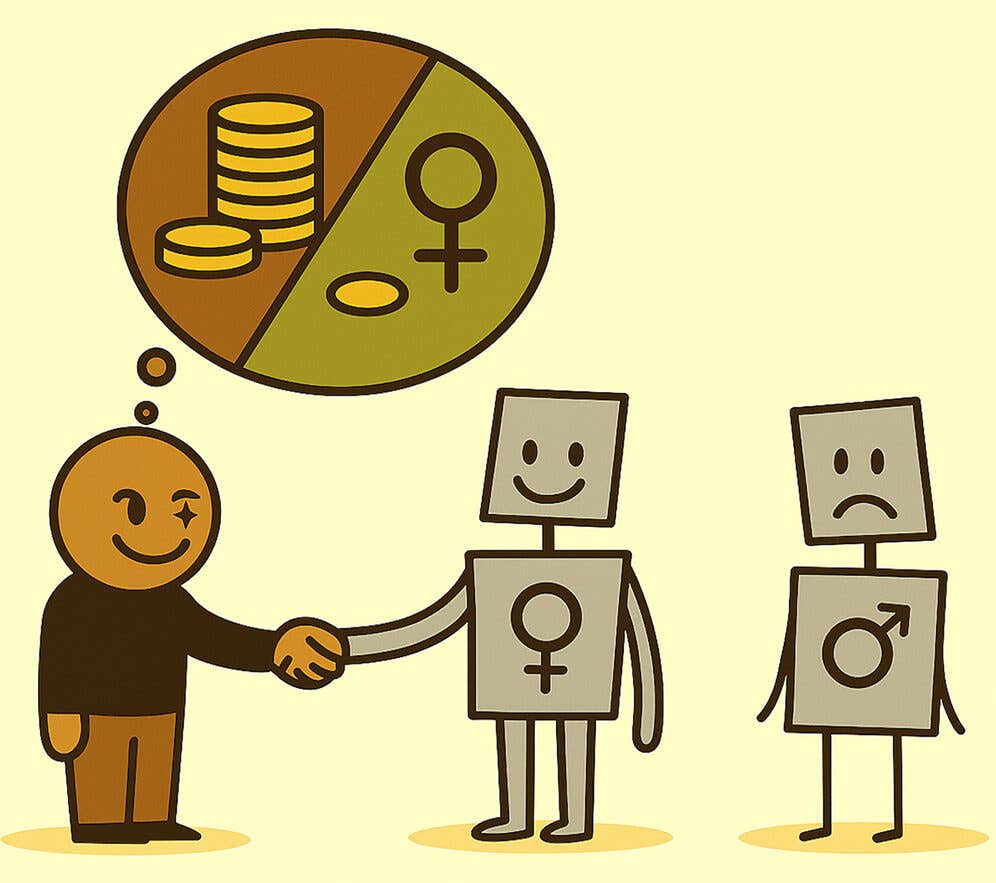

A new study shows that people bring deep-rooted gender expectations into interactions with AI. (CREDIT: iScience)

Cooperation guides much of daily life. You feel it when a stranger waves you into a crowded lane or when communities pull together after a storm. At its core, cooperation asks you to trade a little personal benefit for the greater good and trust that others will not take advantage of your goodwill. As artificial intelligence begins to share that social space, a new question comes into focus. Will people extend that same trust to AI, especially when there is something real to gain or lose?

A recent study from Trinity College Dublin and Ludwig Maximilians Universität Munich explored that question with an unexpected twist. It asked how people behave when an AI partner is given a gender and whether long-standing gender expectations spill over into interactions with machines. The research, published in iScience, offers an early look at how design decisions such as labels, voices, and names shape cooperation between humans and automated agents.

The Experiment Behind the Findings

To explore these patterns, the research team relied on a classic tool from game theory, the Prisoner’s Dilemma. In this game, two players each decide whether to cooperate or defect. When both cooperate, each earns 70 points. When both defect, each earns only 30. But if one player defects while the other cooperates, the defector gains 100 points while the cooperator loses out. It is a simple setup that mirrors real life: trust pays off only when it goes both ways.
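The payoff structure can be sketched in a few lines of Python. This is an illustration, not the researchers' code. The values 70, 30 and 100 come from the article, but the lone cooperator's payoff is not reported here, so `SUCKER = 0` is a hypothetical placeholder. The checks show why the game is a dilemma: defecting pays more whatever the partner does, yet two cooperators both do better than two defectors.

```python
# Points from the article: mutual cooperation 70 each, mutual defection 30 each,
# lone defector 100. The lone cooperator's payoff is not given in the article,
# so SUCKER is a hypothetical placeholder (assumption, not study data).
REWARD, PUNISHMENT, TEMPTATION = 70, 30, 100
SUCKER = 0  # any value below 30 preserves the usual T > R > P > S ordering

def payoff(me: str, partner: str) -> int:
    """Return my points for one round; moves are 'C' (cooperate) or 'D' (defect)."""
    table = {
        ("C", "C"): REWARD,
        ("C", "D"): SUCKER,
        ("D", "C"): TEMPTATION,
        ("D", "D"): PUNISHMENT,
    }
    return table[(me, partner)]

# Whatever the partner does, defecting earns me more...
for partner_move in ("C", "D"):
    assert payoff("D", partner_move) > payoff("C", partner_move)

# ...yet mutual cooperation beats mutual defection for both players.
assert payoff("C", "C") > payoff("D", "D")
print("Defection dominates, but mutual cooperation beats mutual defection.")
```

That tension between individual temptation and joint benefit is what lets the game measure trust.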

More than 400 adults living in the United Kingdom took part in the study through the Prolific platform in July 2023. Each person completed ten rounds of the dilemma. Their partners were not real people. Instead, partner decisions were randomly generated to make sure only the participant’s own choices drove results. Every partner carried two labels, one describing them as either a human or an AI and another describing a gender identity. Options included male, female, non-binary or fluid, and no gender.
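The labeling scheme amounts to two labels per partner, which gives eight possible partner descriptions. The sketch below enumerates them and draws partner moves at random, independently of anything the participant does. It is a hypothetical illustration: the function name and the 50/50 chance of cooperation are assumptions, since the article says only that partner decisions were randomly generated.

```python
import itertools
import random

PARTNER_TYPES = ["human", "AI"]
PARTNER_GENDERS = ["male", "female", "non-binary or fluid", "no gender"]

# Each partner carries one type label and one gender label: 2 x 4 = 8 combinations.
CONDITIONS = list(itertools.product(PARTNER_TYPES, PARTNER_GENDERS))

def simulated_partner_moves(n_rounds: int, seed: int = 0) -> list[str]:
    """Hypothetical helper: draw partner choices at random, ignoring the participant.
    The equal chance of 'C' and 'D' is an assumption, not a detail from the study."""
    rng = random.Random(seed)  # seeded so the sequence is reproducible
    return [rng.choice(["C", "D"]) for _ in range(n_rounds)]

print(len(CONDITIONS))
print(simulated_partner_moves(10))
```

Because the partner's moves never respond to the participant, any difference in behavior across the eight labels can be traced to the labels themselves.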

After each round, participants guessed whether their partner would cooperate or defect. These expectations allowed researchers to categorize each choice into four motives. Some choices reflected optimism about joint benefit. Others showed attempts to take advantage of a trusting partner. Some showed fear of betrayal. A few revealed unconditional cooperation even when people expected disappointment.
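Pairing each choice with the participant's prediction gives a two-by-two grid, and each cell corresponds to one of the four motives described above. A minimal sketch of that mapping follows; the label wording paraphrases the article, and the example rounds are hypothetical, not data from the study.

```python
from collections import Counter

# (own choice, predicted partner choice) -> motive, paraphrasing the article.
MOTIVES = {
    ("C", "C"): "optimism about joint benefit",
    ("D", "C"): "exploiting an expected cooperator",
    ("D", "D"): "fear of betrayal",
    ("C", "D"): "unconditional cooperation",
}

def classify(choice: str, prediction: str) -> str:
    """Return the motive implied by a choice and the prediction made alongside it."""
    return MOTIVES[(choice, prediction)]

# Hypothetical rounds for illustration only; not data from the study.
rounds = [("C", "C"), ("D", "D"), ("D", "C"), ("C", "C"), ("C", "D")]
print(Counter(classify(choice, prediction) for choice, prediction in rounds))
```

The same defection can therefore mean two very different things depending on what the participant expected the partner to do.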

Cooperation Levels Stayed Steady but Motives Shifted

At first glance, the results might seem uneventful. People cooperated with human partners almost as often as they did with AI partners. Cooperation rates were 50.7 percent with humans and 47.8 percent with AI, a small difference that was not statistically significant. But the reasons behind these choices differed in striking ways.

When people defected against human partners, most of those decisions came from distrust. More than 70 percent reflected pessimism about the partner’s intentions. Only about 30 percent were clear attempts to exploit someone expected to cooperate.

Against AI partners, the pattern changed. Distrust still played a role, but it fell to 58.9 percent. Exploitation rose to more than 41 percent. The difference was highly statistically significant, confirming that people were more willing to take advantage of AI than of humans. In other words, people may cooperate with AI as often as they do with humans, but when they choose not to, they are more likely to act selfishly.
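The percentages above are shares of defections only: among all the times people defected, what fraction came with an expectation that the partner would defect (distrust) versus cooperate (exploitation)? The sketch below computes that split. The function name and the sample rounds are hypothetical and chosen only to show the arithmetic; they do not reproduce the study's data.

```python
def defection_motive_shares(rounds: list[tuple[str, str]]) -> dict[str, float]:
    """Split defections by the prediction made alongside them.

    Each round is (own choice, predicted partner choice), with moves 'C' or 'D'.
    Distrust = defecting while expecting defection; exploitation = defecting
    while expecting cooperation. Cooperative rounds are ignored.
    """
    predictions = [pred for choice, pred in rounds if choice == "D"]
    if not predictions:
        return {"distrust": 0.0, "exploitation": 0.0}
    n = len(predictions)
    return {
        "distrust": predictions.count("D") / n,
        "exploitation": predictions.count("C") / n,
    }

# Hypothetical rounds: three defections, two made while expecting defection.
example = [("D", "D"), ("D", "D"), ("D", "C"), ("C", "C")]
print(defection_motive_shares(example))
```

Because the two shares always sum to one, a drop in distrust (from above 70 percent with humans to 58.9 percent with AI) necessarily means a matching rise in exploitation.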

Gender Labels Shaped Trust and Willingness to Cooperate

The study then isolated the effect of gender labels. Participants cooperated the most with partners labeled female, at a rate of 58.6 percent. They cooperated the least with male partners, at only 39.7 percent. Non-binary and no-gender partners fell between the two.

Motives again revealed deeper differences. When cooperating with female partners, people did so with confidence. More than 90 percent of those choices came from the belief that the other side would cooperate as well. With male partners, that figure dropped to 73 percent. Defection toward male partners reflected strong distrust. More than 80 percent of those choices were tied to expectations of mutual defection. When defecting against female partners, motives were split. Nearly half were attempts to exploit an expected cooperator.

Overall, behavior toward male partners stood apart. Female, non-binary, and no-gender partners received similar levels of goodwill and trust. Male partners received the least.

When AI Carries a Gender, Bias Follows

Once researchers separated human from AI partners, the same gender patterns remained. People cooperated most with female-labeled partners and least with male-labeled ones whether those partners were human or AI. Cooperation with female humans reached 62.2 percent but dropped to 55 percent for female AI. Although these gaps were meaningful, the broader trend did not differ much between humans and bots.

Yet the motives shifted again. People were most likely to exploit female AI partners and most likely to distrust male human partners. The study found that gendered expectations from daily life do not disappear when the interaction involves a machine. Instead, the same patterns appear and sometimes grow stronger.

How Participant Gender Shaped Behavior

The gender of the participants themselves also mattered. Female participants cooperated more often than male participants with both humans and AI. They also responded more sharply to partner gender.

Cooperation with female human partners reached 69 percent for female participants but fell to 38 percent when the partner was male. Male participants showed no similar pattern. These differences were present with AI partners as well, though not as strong.

What This Means for AI Design

The study highlights a growing challenge as AI becomes more common in workplaces, homes, and social spaces. Once designers give AI systems human-like traits such as gender, they also introduce human-like biases. Sepideh Bazazi, the study’s first author, noted that these tendencies matter for trust and fairness. Her team argues that designers should take these effects seriously to avoid reinforcing discrimination or inviting misuse.

Co-author Taha Yasseri added that even a simple label can shape cooperation. Jurgis Karpus described the tradeoff clearly. Human-like cues might help build rapport with AI, but they also open the door to old patterns that society is still trying to address.

The study has limits. It used one-time interactions rather than long-term relationships and included only UK participants. Cultural expectations and social norms may shape results differently elsewhere. Still, the findings raise important questions about how design choices guide moral behavior toward automated systems.

Research findings are available online in the journal iScience.

Shy Cohen

Writer