New memory structure helps AI models think longer and faster without using more power

A new memory compression method helps AI models reason more deeply while using less power, boosting performance in maths, science, and coding.

Edited By: Joseph Shavit

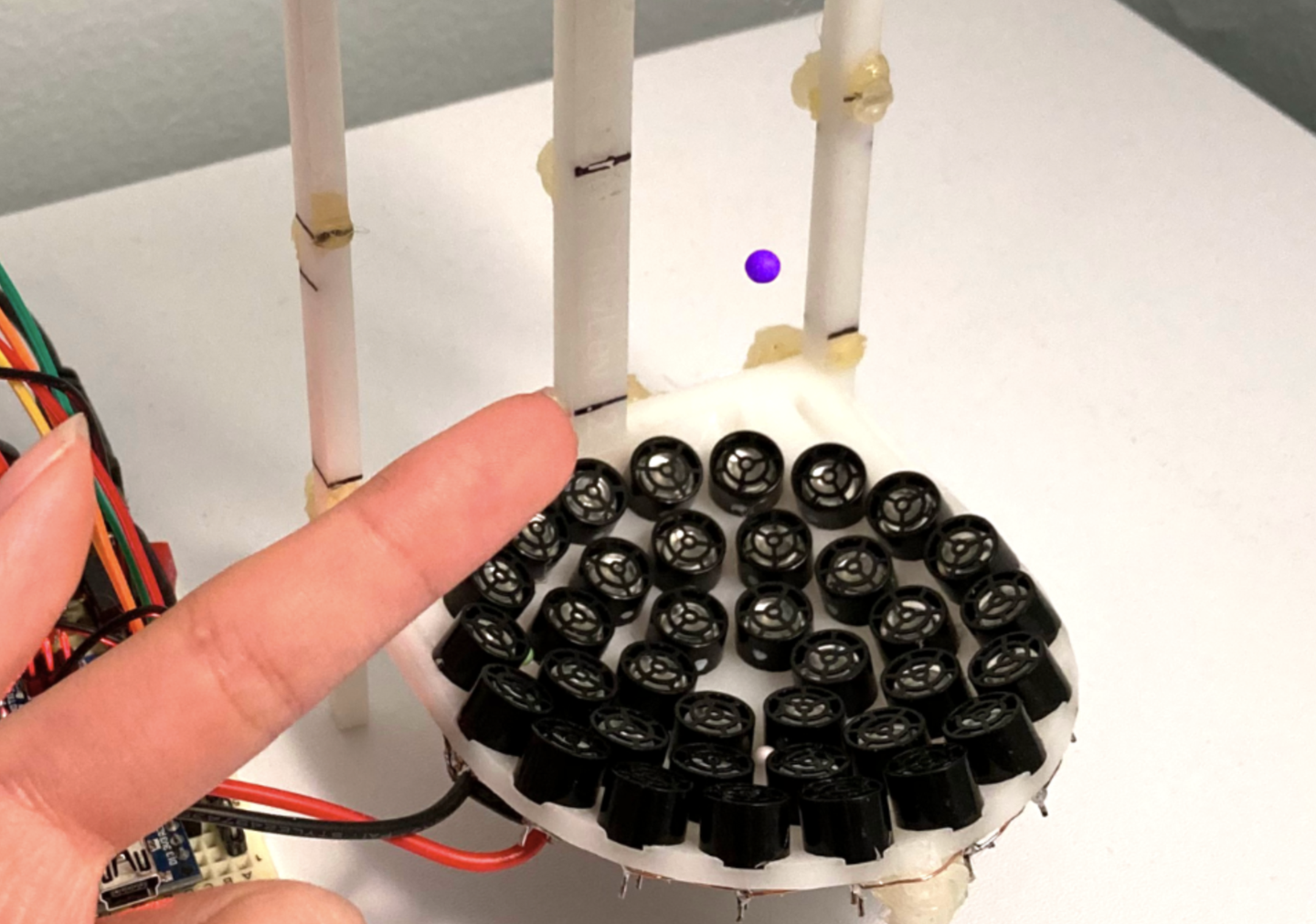

Researchers show how AI models can think deeper and faster by compressing memory without losing accuracy. (CREDIT: Shutterstock)

Researchers from the University of Edinburgh and NVIDIA have introduced a new method that helps large language models reason more deeply without increasing their size or energy use. The work, presented at the NeurIPS artificial intelligence conference, tackles a core technical barrier that limits how far today’s AI systems can “think” when solving complex problems in maths, science, and coding.

At the center of the study is a memory structure known as the key–value cache, often shortened to the KV cache. As a model generates text one word or token at a time, this cache stores the key and value vectors computed for every token processed so far, so the model does not have to recompute them at each step. Every additional reasoning step expands the cache, and once it grows large, performance slows sharply. Even powerful GPUs struggle when they must repeatedly read large amounts of stored data during inference, the stage when a model responds to a prompt.
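To give a sense of scale, the sketch below estimates how large a KV cache gets for a single long sequence. The model configuration (`ModelConfig`, 28 layers, 4 key/value heads, 128-dimensional heads, 16-bit values) is a hypothetical example, not a model from the study, and the `compression` parameter simply divides the number of retained tokens to mimic a four- or eight-times smaller cache.

```python
from dataclasses import dataclass


@dataclass
class ModelConfig:
    # Hypothetical configuration chosen for illustration only.
    num_layers: int
    num_kv_heads: int
    head_dim: int
    bytes_per_value: int = 2  # e.g. 16-bit floating point


def kv_cache_bytes(cfg: ModelConfig, num_tokens: int, compression: int = 1) -> int:
    """Approximate KV cache size for one sequence.

    Every retained token stores one key vector and one value vector (hence the
    factor of 2) for each KV head in each layer. `compression` is an assumed
    integer ratio: 8 means only 1/8 of the tokens are kept.
    """
    retained = num_tokens // compression
    per_token = 2 * cfg.num_layers * cfg.num_kv_heads * cfg.head_dim * cfg.bytes_per_value
    return retained * per_token


cfg = ModelConfig(num_layers=28, num_kv_heads=4, head_dim=128)
for ratio in (1, 4, 8):
    mib = kv_cache_bytes(cfg, 32_768, ratio) / 2**20
    print(f"{ratio}x compression: {mib:.0f} MiB")
# 1x compression: 1792 MiB
# 4x compression: 448 MiB
# 8x compression: 224 MiB
```

The cache grows linearly with the number of tokens, so a long chain of reasoning in one query can occupy gigabytes of GPU memory on its own.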

Rather than forcing models to shorten their reasoning or rely on rough memory cuts, the research team developed a new approach that allows models to manage memory more intelligently. Their method, called Dynamic Memory Sparsification, or DMS, enables models to compress their memory by up to eight times while maintaining, or even improving, accuracy.

Dr. Edoardo Ponti, a GAIL Fellow and lecturer in natural language processing at the University of Edinburgh’s School of Informatics, said the method changes what models can do within the same time limits. “In a nutshell, our models can reason faster but with the same quality. Hence, for an equivalent time budget for reasoning, they can explore more and longer reasoning threads. This improves their ability to solve complex problems in maths, science, and coding,” he said.

Why memory slows down modern AI reasoning

Inference-time scaling, which means spending more computation while a model answers rather than making the model itself larger, has become a major focus in AI research. When a model faces a difficult question, it often works through a problem step by step, producing long chains of intermediate reasoning. Each step adds new entries to the KV cache. As these entries pile up, the model spends more time fetching stored data than computing new tokens.
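Because each new token attends to every entry already in the cache, the total amount of data read grows roughly with the square of the reasoning length. The sketch below counts cumulative cache reads and asks how many tokens fit under a fixed read budget. It is an idealized model: the helper names are hypothetical, the prompt is ignored, and a compressed cache is assumed to hold exactly `ceil(step / compression)` entries, whereas real learned compression varies across layers and over the sequence.

```python
def cache_entries_read(step: int, compression: int = 1) -> int:
    """Cache entries read when generating token number `step` (1-based).

    Idealized assumption: a compressed cache keeps ceil(step / compression) entries.
    """
    return -(-step // compression)  # integer ceiling division


def total_reads(num_tokens: int, compression: int = 1) -> int:
    """Cumulative cache entries read while generating `num_tokens` tokens."""
    return sum(cache_entries_read(t, compression) for t in range(1, num_tokens + 1))


def tokens_within_budget(read_budget: int, compression: int = 1) -> int:
    """How many tokens can be generated before the read budget runs out."""
    reads, n = 0, 0
    while reads + cache_entries_read(n + 1, compression) <= read_budget:
        n += 1
        reads += cache_entries_read(n, compression)
    return n


budget = total_reads(1_000)                      # 500,500 reads for 1,000 uncompressed tokens
print(total_reads(2_000) / budget)               # doubling the length roughly quadruples the reads
print(tokens_within_budget(budget, compression=8))  # 2825 tokens under the same budget
```

Under these assumptions, an eight-times smaller cache lets the model produce nearly three times as many tokens for the same number of memory reads, which is the kind of headroom that longer or additional reasoning threads can use.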

Previous attempts to address this bottleneck focused on aggressive memory trimming. Some methods removed tokens based on fixed rules, which reduced memory use but often damaged accuracy. Other approaches learned which parts of memory to keep, but required long retraining runs that made them expensive and impractical.

The Edinburgh and NVIDIA team aimed to find a balance. They wanted a system that learned what to keep, avoided sudden information loss, and could be added to existing models without massive retraining.

How Dynamic Memory Sparsification works

DMS allows a model to decide which tokens are no longer essential, but delays their removal. Instead of deleting information the moment it is marked for eviction, the model keeps it visible for a short window of subsequent tokens. This delay gives the model a chance to transfer useful details into other retained tokens.
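The idea of delayed eviction can be illustrated with a toy cache. In this hypothetical sketch, `DelayedEvictionCache` and its `window` parameter are invented names, the eviction decision is passed in as a boolean rather than predicted by a trained model, and payload strings stand in for key and value vectors. A token marked for eviction stays visible while the next `window` tokens are added and is dropped only afterward.

```python
from collections import deque


class DelayedEvictionCache:
    """Toy KV cache in which eviction decisions take effect after a delay.

    Hypothetical sketch: `window` is the number of later tokens that can still
    see a token after it has been marked for eviction.
    """

    def __init__(self, window: int):
        self.window = window
        self.entries: dict[int, str] = {}  # position -> payload (stand-in for key/value vectors)
        self.pending: deque[tuple[int, int]] = deque()  # (position, step when marked)
        self.step = 0

    def append(self, position: int, payload: str, evict: bool) -> None:
        self.step += 1
        self.entries[position] = payload
        if evict:
            self.pending.append((position, self.step))
        # Drop tokens whose grace window has fully elapsed (oldest marks first).
        while self.pending and self.step - self.pending[0][1] > self.window:
            pos, _ = self.pending.popleft()
            del self.entries[pos]

    def visible(self) -> list[int]:
        return sorted(self.entries)


cache = DelayedEvictionCache(window=2)
cache.append(0, "t0", evict=True)   # token 0 is marked but not yet removed
cache.append(1, "t1", evict=False)
cache.append(2, "t2", evict=False)
print(cache.visible())  # [0, 1, 2]: token 0 is still visible to tokens 1 and 2
cache.append(3, "t3", evict=False)
print(cache.visible())  # [1, 2, 3]: the grace window has passed
```

Immediate deletion corresponds to `window=0`, where a marked token disappears in the same step it is flagged.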

The system relies on a learned mask that gradually reduces a token’s influence as the sequence grows. Tests showed that immediate deletion caused sharp drops in reasoning quality. Delayed eviction, by contrast, preserved stable performance even when memory was compressed to one quarter or one eighth of its original size.
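One common way to let a mask reduce a token's influence smoothly, rather than switching it off, is to add the logarithm of a "keep" value between 0 and 1 to that token's attention score before the softmax. The sketch below shows this generic technique. It is an assumption for illustration, not the exact formulation used in DMS, and the keep values that a learned component would produce are supplied by hand here.

```python
import math


def masked_attention_weights(scores: list[float], keep: list[float]) -> list[float]:
    """Softmax attention weights with a soft, per-token keep mask.

    Assumption for illustration: keep=1.0 leaves a token's score unchanged,
    and keep values approaching 0.0 push its logit toward -inf, so its share
    of attention fades gradually instead of vanishing all at once.
    """
    eps = 1e-9  # avoids log(0) when a token is fully masked
    logits = [s + math.log(max(k, eps)) for s, k in zip(scores, keep)]
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


scores = [2.0, 1.0, 0.5]
for k in (1.0, 0.5, 0.1, 0.0):
    w = masked_attention_weights(scores, [k, 1.0, 1.0])
    print(f"keep={k:.1f} -> attention on token 0: {w[0]:.3f}")
```

Because the mask is continuous, it can be trained with ordinary gradient descent, and a token's contribution shrinks step by step as its keep value falls.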

Another key advantage is efficiency during training. The researchers were able to retrofit large models using about 1,000 training steps to reach eight-times compression. Earlier methods often needed tens of thousands of steps and still struggled at high compression levels. This makes DMS practical for a wide range of existing language models.

Stronger results on demanding benchmarks

To test whether memory compression actually helps reasoning, the team evaluated DMS on demanding benchmarks that require multi-step thinking. These included AIME 2024 and MATH-500 for mathematics, GPQA Diamond for advanced science questions, and LiveCodeBench for coding.

Across these tests, models using DMS achieved higher accuracy under the same compute limits. On AIME 2024, a qualifying exam for the United States Mathematical Olympiad, compressed models scored about twelve points higher on average than uncompressed models using the same number of memory reads. On GPQA Diamond, which includes biology, chemistry, and physics questions written by PhD-level experts, scores improved by more than eight points. LiveCodeBench results showed gains of roughly ten points.

The team tested multiple model sizes, including 1.5 billion, 7 billion, and 32 billion parameter versions of Qwen, along with Llama models. In most cases, DMS outperformed both heuristic memory cuts and other learned sparsification methods.

Faster responses and lower energy costs

"Beyond accuracy, our team measured real-world performance. At short context lengths, compressed and uncompressed models behaved similarly. As context grew longer, however, compressed models avoided sharp increases in latency. This allowed them to handle deeper reasoning without overwhelming GPU memory," Dr. Ponti told The Brighter Side of News.

"Throughput also improved. When models were run at the largest batch sizes that fit into memory, DMS-enabled systems processed far more queries per minute. For large-scale deployments, this translates into lower costs and reduced power use per task," he continued.

"The same method can also be used differently. Instead of deeper reasoning for one query, compressed memory can allow a model to respond to more users at once. This reduces energy use per response, an important consideration as AI systems scale," he concluded.
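The link between a smaller cache and serving more users can be shown with simple arithmetic: the memory left after loading the model weights is shared among the KV caches of all sequences in a batch. The sketch below uses hypothetical numbers (an 80 GiB accelerator, 15 GiB of weights, and a 1,792 MiB uncompressed cache per long sequence) and a hypothetical helper `max_batch_size`. It ignores activations, fragmentation, and other runtime overheads.

```python
GiB = 2**30
MiB = 2**20


def max_batch_size(gpu_bytes: int, weight_bytes: int, kv_bytes_per_seq: int,
                   compression: int = 1) -> int:
    """Number of sequences whose KV caches fit in the memory left after the weights.

    Simplifying assumptions: every sequence reaches the same length, and
    activations and allocator overheads are ignored.
    """
    free = gpu_bytes - weight_bytes
    if free <= 0:
        return 0
    per_seq = -(-kv_bytes_per_seq // compression)  # ceiling division
    return free // per_seq


for ratio in (1, 8):
    print(ratio, max_batch_size(80 * GiB, 15 * GiB, 1792 * MiB, ratio))
# 1 37
# 8 297
```

Under these assumptions, eight-times compression lets roughly eight times as many sequences be decoded concurrently, which is where the higher throughput and lower energy use per response come from.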

Reliability beyond complex reasoning

The team also examined whether memory compression harmed everyday tasks. Across general knowledge, conversation, instruction following, and coding benchmarks, DMS models closely matched the performance of their original counterparts. In some coding tasks, they even performed slightly better.

The study also revealed patterns in how models use memory. Early layers tended to preserve more detailed information, while later layers compressed more aggressively. Compression also increased as sequences grew longer, suggesting models rely less on early tokens once enough context is established.

Practical implications of the research

The findings suggest a clear path toward more efficient and capable AI systems. By allowing models to reason more deeply without extra hardware, DMS can improve problem-solving in fields that rely on complex analysis, including science, engineering, and software development. The method also supports sustainability goals by reducing energy use per task.

In practical terms, this approach could benefit AI systems running on devices with limited or slow memory, such as smart home devices and wearable technology. It may also allow data centers to handle higher workloads without increasing power demands.

As Dr. Ponti and his team continue their work through the European Research Council-funded AToM-FM project, the focus will remain on understanding how large AI systems store, forget, and reuse information more efficiently.

The research findings are available online as a preprint on arXiv.

Shy Cohen

Writer