Security researchers develop first-ever functional defense against cyberattacks on AI models

A new training strategy makes neural networks far harder to steal, blocking cryptanalytic extraction attacks while preserving nearly all accuracy.

Edited By: Joseph Shavit


Researchers warn that cryptanalytic extraction, the most advanced form of cyberattack on AI models, can rebuild a model by asking it thousands of carefully chosen questions. (CREDIT: Wikimedia / CC BY-SA 4.0)

Neural networks shape many tools you rely on every day, from photo filters to medical software. Building these systems is costly. They need enormous computing power, long training cycles, and huge collections of curated data. Companies treat the finished models as valuable property because they represent years of research.

However, many of these systems are offered through online services where anyone can send in a question and receive a prediction. That convenience creates a quiet but growing danger. Attackers are learning to steal the internal parameters that make these models run.

Researchers now warn that the most advanced of these attacks, called cryptanalytic extraction, can rebuild a model by asking it thousands of carefully chosen questions. Each answer reveals a small clue about the model's internal structure. Over time, those clues form a detailed map that exposes the model's weights and biases. These attacks work surprisingly well against neural networks that use ReLU activation functions. Because such networks compute piecewise linear functions, attackers can hunt for inputs at which a neuron flips between active and inactive and use those points to uncover the neuron's signature.

How the Attacks Work

The attack moves through the network, layer by layer. It searches for “critical points” where the input to a neuron is exactly zero. That moment marks the line between activation states. By nudging an input slightly in one direction and then in the opposite direction, an attacker can study how the overall output shifts. Those tiny shifts help cancel out the influence of other neurons and reveal a pattern tied to the target neuron alone.
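
The nudging step can be sketched in the same spirit, assuming a hypothetical toy network f(x) = a · ReLU(Wx + b). Gradients estimated just before and just after a critical point differ only in the contribution of the neuron that switched, so their difference is proportional to that neuron's weight vector. For brevity the sketch computes the critical point from the toy weights; an attacker would have to find it through queries. `grad`, `signature_at`, and the step sizes are illustrative choices.

```python
import numpy as np

# Hypothetical toy model f(x) = a . ReLU(W x + b), as an attacker would query it.
rng = np.random.default_rng(1)
W = rng.normal(size=(8, 4))
b = rng.normal(size=8)
a = rng.normal(size=8)

def f(x):
    return a @ np.maximum(W @ x + b, 0.0)

def grad(x, h=1e-7):
    """Central finite-difference gradient of f, built only from queries."""
    return np.array([(f(x + h * e) - f(x - h * e)) / (2 * h) for e in np.eye(len(x))])

def signature_at(x_crit, d, delta=1e-4):
    """Compare gradients just before and just after a critical point on the line x0 + t d.

    Neurons that keep their state contribute equally on both sides and cancel,
    leaving a[j] * sign(W[j] @ d) * W[j] for the neuron j whose boundary is crossed.
    """
    return grad(x_crit + delta * d) - grad(x_crit - delta * d)

# For brevity, place a critical point of neuron j using the toy weights directly.
j = 3
x0, d = rng.normal(size=4), rng.normal(size=4)
t_star = -(W[j] @ x0 + b[j]) / (W[j] @ d)
x_crit = x0 + t_star * d

sig = signature_at(x_crit, d)
cosine = abs(sig @ W[j]) / (np.linalg.norm(sig) * np.linalg.norm(W[j]))
print(f"|cosine(signature, W[j])| = {cosine:.6f}")
```

The recovered `sig` matches row `W[j]` only up to an unknown factor `a[j] * sign(W[j] @ d)`, which is one reason pinning down the exact scale and sign of the weights takes additional work.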

Early methods could only recover partial information, but newer techniques can figure out both the size and the direction of the weights. Some even work using nothing more than the model’s predicted labels. All rely on the same core assumption. Neurons in a given layer behave differently enough that their signals can be separated. When that is true, the attack can cluster each neuron’s critical points and rebuild the entire network with surprising accuracy.
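
The separability assumption can be made concrete with a simplified picture, assuming each measured signature is a copy of one neuron's weight vector with unknown sign and scale plus a little noise. A greedy grouping by direction then finds one cluster per neuron when the neurons differ, but merges near-identical neurons into a single cluster. The functions and the 0.999 cosine threshold are hypothetical; real attacks use their own, more careful clustering.

```python
import numpy as np

def simulate_signatures(W, per_neuron, rng, noise=1e-6):
    """Hypothetical stand-in for measured signatures: each is a copy of one neuron's
    weight vector with an unknown sign and scale plus a little measurement noise."""
    sigs = []
    for w in W:
        for _ in range(per_neuron):
            scale = rng.choice([-1.0, 1.0]) * rng.uniform(0.5, 2.0)
            sigs.append(scale * w + noise * rng.normal(size=w.shape))
    return np.array(sigs)

def count_clusters(sigs, threshold=0.999):
    """Greedy clustering: a signature joins the first cluster whose representative
    points along the same line (|cosine| > threshold); otherwise it starts a new one."""
    reps = []
    for s in sigs:
        u = s / np.linalg.norm(s)
        if not any(abs(u @ r) > threshold for r in reps):
            reps.append(u)
    return len(reps)

rng = np.random.default_rng(0)
distinct = rng.normal(size=(8, 16))                               # 8 well-separated neurons
similar = rng.normal(size=16) + 1e-3 * rng.normal(size=(8, 16))   # 8 near-copies of one neuron

n_distinct = count_clusters(simulate_signatures(distinct, 5, rng))
n_similar = count_clusters(simulate_signatures(similar, 5, rng))
print(n_distinct, n_similar)
```

With these settings the eight separated neurons yield eight clusters, while the eight near-copies collapse into one, so an attacker following this procedure would recover at most one of them.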

Turning Similarity Into Security

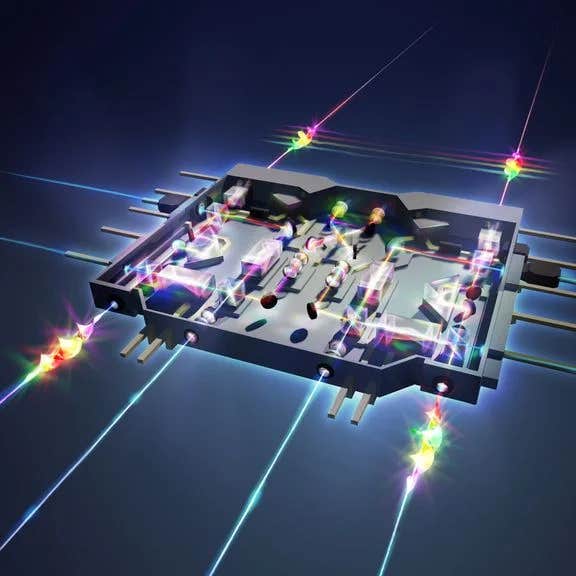

A new study from North Carolina State University reveals a defense that challenges the very assumption these attacks depend on. Instead of hiding information after training ends, the method changes how the neural network learns in the first place. The training process nudges neurons toward each other so that they develop similar sets of parameters. When neurons become harder to tell apart, the attacker loses the ability to isolate each one’s behavior.

If two neurons share identical weights and biases, they create the same boundary in the input space. That means they switch between active and inactive states at exactly the same inputs. Even when they are only nearly identical, the gap between their switching points becomes small. An attacker looking for clear lines to probe now finds only blurred boundaries. The signatures mix, and the extraction process stalls.
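
The shrinking gap can be checked with a small worked example, assuming two hypothetical neurons in a 2-D input space where neuron 2 equals neuron 1 plus `eps` times a fixed offset. Along a probe line x(t) = x0 + t·d, each neuron switches where its pre-activation crosses zero, and the distance between those switching points shrinks roughly in proportion to `eps`.

```python
import numpy as np

def crossing_point(w, b, x0, d):
    """Parameter t at which the pre-activation w . (x0 + t d) + b hits zero."""
    return -(w @ x0 + b) / (w @ d)

def crossing_gaps(eps_values):
    # Hypothetical neuron 1 and a fixed direction in which neuron 2 differs from it.
    w1, b1 = np.array([1.0, 0.5]), -0.2
    dw, db = np.array([0.3, -0.2]), 0.1
    x0, d = np.zeros(2), np.array([1.0, 1.0])    # probe line x(t) = x0 + t d
    gaps = []
    for eps in eps_values:
        w2, b2 = w1 + eps * dw, b1 + eps * db
        gaps.append(abs(crossing_point(w1, b1, x0, d) - crossing_point(w2, b2, x0, d)))
    return gaps

eps_values = [1e-1, 1e-2, 1e-3, 0.0]
for eps, gap in zip(eps_values, crossing_gaps(eps_values)):
    print(f"eps={eps:g}  gap between switching points={gap:.2e}")
```

At `eps = 0` the two neurons are identical and the gap is exactly zero, so no probe along this line can tell them apart.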

The study’s authors created a mathematical framework to describe how this works. It predicts how likely it is that an input lands in the narrow region where two neurons behave differently. As neuron parameters grow closer together, that region shrinks. The attack’s chance of success drops along with it.
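
The study's own framework is not reproduced here, but a classical special case shows the same trend. Assuming zero biases and standard normal inputs, two ReLU neurons whose weight vectors meet at angle θ are in different states on a fraction θ/π of inputs (the random-hyperplane result used in the Goemans-Williamson analysis). The sketch estimates that fraction by Monte Carlo sampling and compares it with θ/π as neuron 2 moves toward neuron 1; `disagreement_rate`, `angle`, and the perturbation direction `v` are illustrative.

```python
import numpy as np

def disagreement_rate(w1, w2, b1=0.0, b2=0.0, n=200_000, seed=0):
    """Monte Carlo estimate of P[the two neurons are in different ReLU states]
    for inputs x drawn from a standard normal distribution."""
    rng = np.random.default_rng(seed)
    X = rng.normal(size=(n, len(w1)))
    return np.mean((X @ w1 + b1 > 0) != (X @ w2 + b2 > 0))

def angle(u, v):
    c = u @ v / (np.linalg.norm(u) * np.linalg.norm(v))
    return np.arccos(np.clip(c, -1.0, 1.0))

rng = np.random.default_rng(42)
w1, v = rng.normal(size=10), rng.normal(size=10)   # v: hypothetical direction of difference

results = []
for eps in [1.0, 0.1, 0.01]:
    w2 = w1 + eps * v
    est = disagreement_rate(w1, w2)
    theory = angle(w1, w2) / np.pi       # closed form for zero biases and Gaussian inputs
    results.append((eps, est, theory))
    print(f"eps={eps:<5} estimated={est:.4f}  theta/pi={theory:.4f}")
```

`disagreement_rate` also accepts nonzero biases `b1` and `b2`; no equally simple closed form applies then, but the Monte Carlo estimate still works.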

Training Models With Built-In Protection

To take advantage of this insight, the researchers introduced a new training method called extraction-aware learning. It adds a second term to the training loss. The first term still focuses on the model’s regular task, such as predicting digits or classifying images. The second term penalizes neurons that drift too far apart. This encourages the network to develop clusters of similar neurons that maintain performance but resist extraction.
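
Written as a formula, the objective might look like the following. This is a hedged reconstruction, assuming the similarity term is a squared Euclidean distance between neurons' parameter vectors (incoming weights plus bias); λ is the single weighting factor, S is the set of protected layers, and the notation is ours rather than the paper's.

```latex
\[
\mathcal{L}(\theta) = \mathcal{L}_{\text{task}}(\theta)
  + \lambda \sum_{\ell \in S} \sum_{i<j}
    \bigl\lVert \mathbf{p}^{(\ell)}_i - \mathbf{p}^{(\ell)}_j \bigr\rVert_2^2,
\qquad
\mathbf{p}^{(\ell)}_i = \bigl(\mathbf{w}^{(\ell)}_i,\; b^{(\ell)}_i\bigr)
\]
```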

The similarity term examines all pairs of neurons in each layer. For each pair, it calculates how different their weights are and adds that difference to the similarity loss. A single factor controls how strongly the model pushes neurons together. If the factor is too high, accuracy will suffer. If it is too low, the protection may not be strong enough. The study explores ways to tune this, including focusing only on the first layer. Because extraction attacks move layer by layer, corrupting the first layer can throw off the rest of the process.
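
In code, the pairwise term is short. This NumPy sketch shows only the arithmetic, assuming the difference between two neurons is the squared Euclidean distance between their (weights, bias) vectors. `similarity_penalty`, `extraction_aware_loss`, and the `first_layer_only` switch are hypothetical names; a real training loop would express the same term in an autodiff framework such as PyTorch or JAX so that gradients flow through it.

```python
import numpy as np

def similarity_penalty(W, b):
    """Sum over all neuron pairs (i < j) of the squared distance between their parameters.

    Row i of W and entry i of b hold neuron i's incoming weights and bias.
    """
    P = np.hstack([W, b[:, None]])      # one parameter vector per neuron
    n = P.shape[0]
    total = 0.0
    for i in range(n):
        for j in range(i + 1, n):
            diff = P[i] - P[j]
            total += float(diff @ diff)
    return total

def extraction_aware_loss(task_loss, layers, lam, first_layer_only=False):
    """Task loss plus lam times the similarity penalty of the protected layers.

    layers is a list of (W, b) pairs ordered from input to output.
    """
    protected = layers[:1] if first_layer_only else layers
    return task_loss + lam * sum(similarity_penalty(W, b) for W, b in protected)

# Three neurons with parameters (0,0,0), (1,0,0), (0,1,0): pair distances 1 + 1 + 2 = 4.
W1, b1 = np.array([[0.0, 0.0], [1.0, 0.0], [0.0, 1.0]]), np.zeros(3)
print(similarity_penalty(W1, b1))
print(extraction_aware_loss(0.5, [(W1, b1), (W1, b1)], lam=0.1, first_layer_only=True))
```

The double loop mirrors the "all pairs" description and costs O(n²) per layer. For this particular distance, the identity sum over i<j of ||p_i - p_j||^2 = n * sum_i ||p_i||^2 - ||sum_i p_i||^2 gives the same value in linear time.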

An interesting option is to protect only a random subset of neuron pairs. This reduces training cost while still confusing the attack. Importantly, all the extra work happens only during training. Once deployed, the model runs normally without extra memory use or slower runtimes.
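
Sampling a random subset of pairs can be sketched as follows, assuming pairs are drawn uniformly without replacement (and could be redrawn at every training step) and the same squared-distance penalty is applied only to them. The layer shape mirrors a first layer on 28×28 MNIST images but is otherwise arbitrary, and the helper names are hypothetical.

```python
import itertools
import numpy as np

def sample_pairs(n_neurons, n_pairs, rng):
    """Draw n_pairs distinct neuron pairs (i < j) uniformly at random."""
    all_pairs = list(itertools.combinations(range(n_neurons), 2))
    chosen = rng.choice(len(all_pairs), size=n_pairs, replace=False)
    return [all_pairs[k] for k in chosen]

def subset_penalty(W, b, pairs):
    """Squared-distance similarity penalty restricted to the given neuron pairs."""
    P = np.hstack([W, b[:, None]])      # one (weights, bias) vector per neuron
    return sum(float(np.sum((P[i] - P[j]) ** 2)) for i, j in pairs)

rng = np.random.default_rng(0)
W, b = rng.normal(size=(64, 784)), rng.normal(size=64)   # hypothetical first layer
pairs = sample_pairs(64, 200, rng)      # 200 of the 2016 possible pairs
penalty = subset_penalty(W, b, pairs)
print(len(pairs), penalty)
```

Enumerating every pair, as `sample_pairs` does, is fine for small layers; for a very wide layer one would sample index pairs directly instead. Either way the penalty only touches training, so the deployed weights are used exactly as before.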

Tested Against Real Attacks

The team tested this defense on neural networks that previous studies had broken in just a few hours. One of the clearest results comes from a model trained on the MNIST digit dataset with two small hidden layers. Earlier work showed that attackers could fully reconstruct this model in around three and a half hours. After applying the new defense, extraction attempts failed even after running for two full days. Accuracy dropped by less than one percentage point.

Some defended models even performed slightly better than before. In one case, accuracy rose by 0.43 percentage points while still resisting extraction. The study found that across all tests, models could be secured with almost no loss in performance.

The team also checked how existing attack implementations behaved against these defended networks. The attacks normally group critical points into clean clusters, one per neuron. In protected networks, those clusters never form. The attacker is forced to collect more data, probe from new directions, and run more tests, but the neurons still blur together. The attack collapses under its own complexity.

There are limits. The work focuses on fully connected networks that use piecewise linear activations. The same style of attack has not yet been shown to work on convolutional models or large language systems. Still, the new technique is not tied to any specific architecture and could adapt as attacks shift.

Practical Implications of the Research

These findings offer a realistic path toward protecting commercial AI services. Companies can train models that resist mathematical extraction without slowing down user requests or adding new hardware.

This may help safeguard sensitive systems such as medical tools, financial models, and consumer AI apps. It may also reduce the risk of stolen models being reused in harmful or deceptive ways.

The theoretical framework created by the researchers helps developers measure how secure their models are before deploying them, which could become an important step in future AI development cycles.

Research findings are available online as a preprint on arXiv.


Shy Cohen

Writer