UCLA scientists use light to create energy-efficient generative AI models

UCLA engineers create an AI model that uses light to generate images, offering faster, greener, and more sustainable computing.

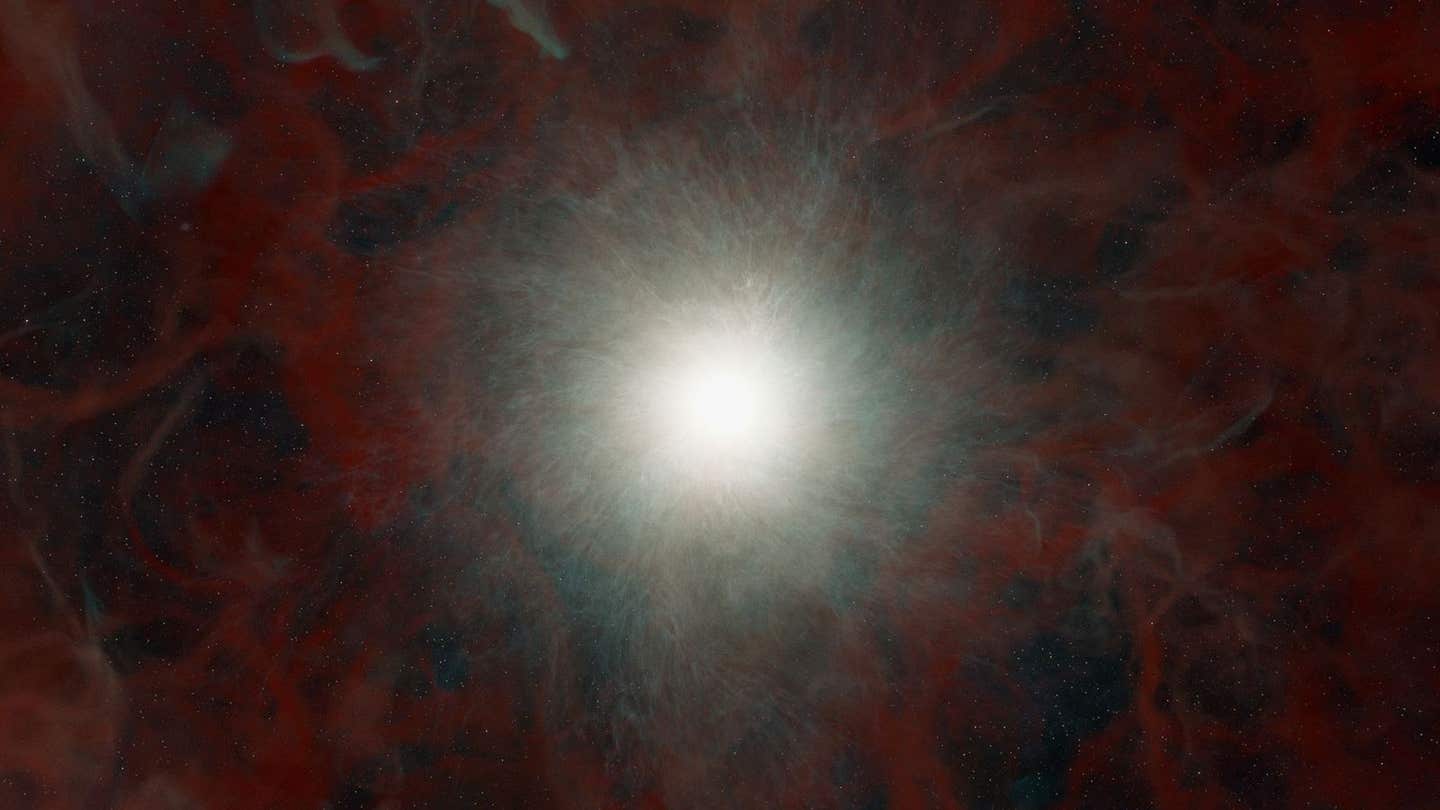

A UCLA team has built an AI system that uses light to generate images. (CREDIT: Nature)

Artificial intelligence has dazzled the world with its ability to create pictures, words, and even music from scratch. But behind the magic lies a hidden cost. Training and running today’s most advanced generative AI systems consumes massive amounts of electricity, creates significant carbon emissions, and uses up vast amounts of water to cool sprawling data centers. The question is whether this technology, for all its wonders, can remain sustainable as demand grows.

A team of researchers at the UCLA Samueli School of Engineering believes they may have found an answer—one that trades the energy-hungry churn of supercomputers for the elegance and speed of light. Their new optical generative model uses photonics to create images in ways that could dramatically reduce the environmental footprint of AI while keeping performance high.

Instead of relying on billions of digital calculations to piece together a picture, this approach lets light itself handle most of the work. “Our work shows that optics can be harnessed to perform generative AI tasks at scale,” said Aydogan Ozcan, senior author of the study. “By eliminating the need for heavy, iterative digital computation during image inference, optical generative models like ours open the door to snapshot, energy-efficient AI systems that could transform everyday technologies.”

How It Works

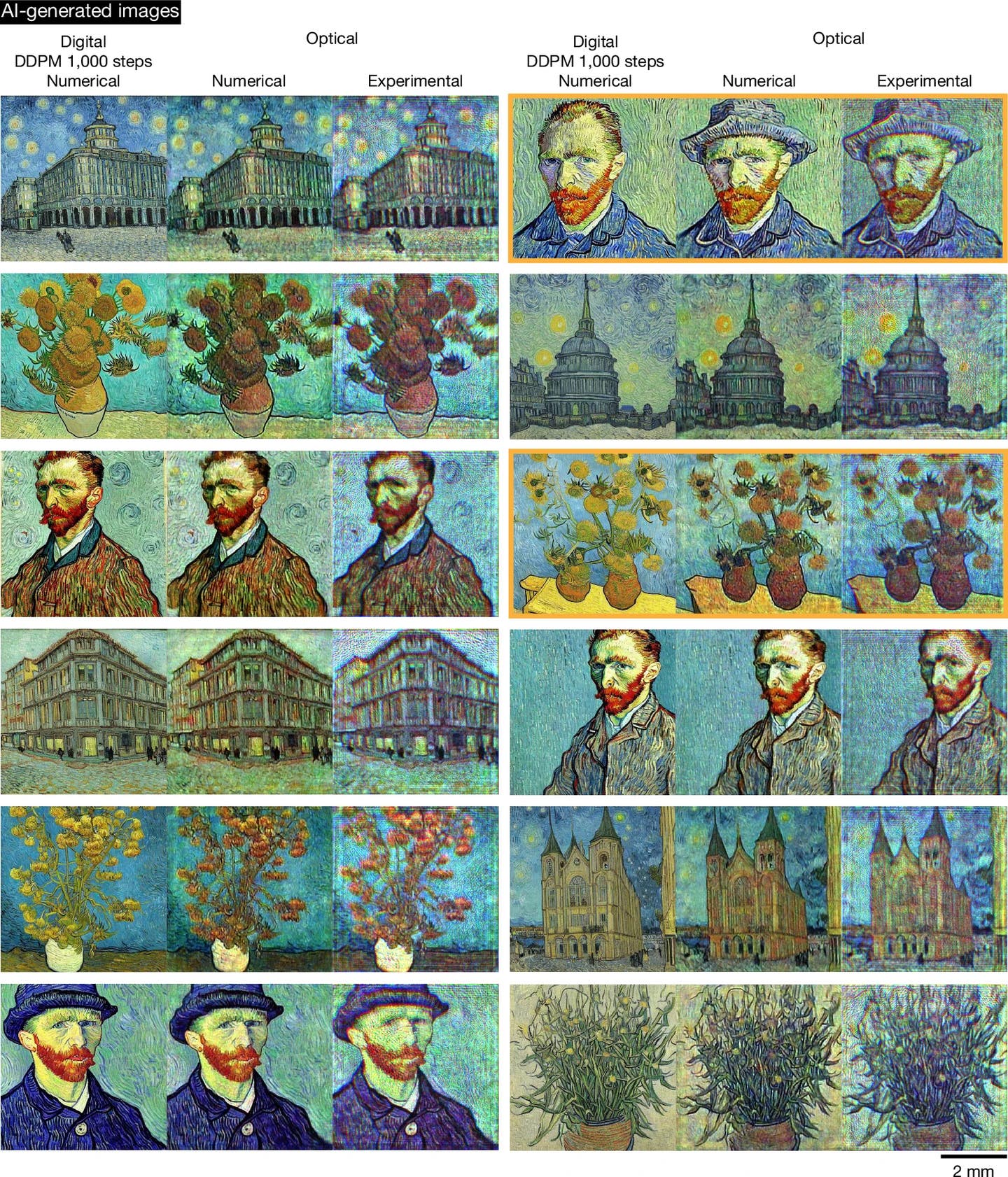

At the heart of the setup is a simple yet ingenious partnership between a small digital encoder and an optical decoder. The digital part transforms random noise into a “phase map,” which is then displayed on a spatial light modulator. That map tells the light how to bend, scatter, or shift as it passes through the system. Once the light moves through a specially designed optical decoder, an image appears on a sensor—whether it’s a handwritten number, a butterfly, or a portrait in the style of Vincent van Gogh.

Because the heavy lifting is done by the physics of light rather than by electronic circuits, this process happens astonishingly fast. The optical stage itself takes less than a nanosecond, with the only real bottleneck being how quickly the light modulator can refresh its pattern. The researchers call this “snapshot generation,” because a complete image is created in a single burst of light.

The team also built an iterative version of their system that mimics the way popular digital diffusion models refine images step by step. This approach avoids problems like “mode collapse,” where models get stuck generating the same few patterns over and over. The optical iterative models produced more diverse results without giving up efficiency.

Putting the Model to the Test

The researchers didn’t stop at theory. They built a working optical system and put it through a series of experiments across well-known datasets. The model generated black-and-white images of handwritten digits from the MNIST dataset, clothing items from Fashion-MNIST, and more complex pictures like butterflies and human faces.

They measured performance using two key metrics: Inception Score, which tracks diversity and quality, and Fréchet Inception Distance, which measures how close generated images are to real ones. For simpler datasets, the optical models performed competitively against digital ones. In one experiment, a classifier trained only on optically generated digits still reached 99.18% accuracy—just 0.4% less than training on the real thing.

In color experiments, the team used three different wavelengths of light for red, green, and blue. This allowed them to generate full-color images of butterflies and faces. Failures, where noise overwhelmed the signal, were rare—only about 3% for butterflies and 7% for faces.

Another important measurement was diffraction efficiency, or how much of the input light contributes to the final image. Using a single-layer optical decoder, they reached about 42% efficiency. Adding more decoding layers raised that number to about 50% while maintaining solid image quality. In other words, half the incoming light was put to work in creating the picture.

Challenges Along the Way

As with any new technology, the optical model faces real-world hurdles. Precision matters: small misalignments, imperfections in the optics, and limits in how finely the light phase can be controlled all affect results. To get around these issues, the team trained their models with hardware constraints in mind, making sure that what worked in theory would also succeed in practice.

They also suggested future designs that could replace bulky spatial light modulators with thin, passive optical surfaces created using nanofabrication. These could make the system cheaper, more compact, and easier to integrate into everyday devices.

Another intriguing possibility is parallel image generation, where multiple patterns are created at once using different wavelengths or spatial channels. The researchers also see potential in generating 3D images, a feature that could bring new life to augmented and virtual reality.

Toward Sustainable AI

What makes this development especially exciting is its promise to reduce the environmental burden of AI. Traditional generative systems demand supercomputers running for hours, if not days, to create high-quality results. These machines not only consume enormous amounts of power but also require water-intensive cooling systems.

By shifting the generative process into the optical domain, the UCLA team’s method sidesteps much of that demand. In one demonstration, their optical system recreated Van Gogh-style artwork in a single step per color channel, compared with 1,000 steps needed by a digital diffusion model. The images were visually comparable, yet the energy cost was only a fraction of the traditional method.

The team also points out that their model can add layers of security. Different light wavelengths can encode different patterns that only a matched decoder can reconstruct. This physical “key-lock” mechanism could secure communications, protect against counterfeiting, and personalize digital content in ways that are difficult to hack.

Practical Implications of the Research

The future possibilities of light-based AI extend beyond efficiency. Compact, low-power optical models could be embedded in smart glasses, augmented reality headsets, or mobile devices. They could enable real-time AI without draining batteries or requiring constant cloud connections.

Beyond consumer gadgets, the approach has clear potential in biomedical imaging, diagnostics, and secure data transmission. Optical models could help hospitals analyze data faster with less energy, or allow researchers to run large experiments without the environmental costs of massive computer clusters.

Most importantly, this technology shows a path toward scaling AI in a way that doesn’t come at the expense of the planet. By letting light take over some of the thinking, the research points toward a future where powerful AI and sustainability go hand in hand.

Research findings are available online in the journal Nature.

Related Stories

- Scientists build generative AI tool to fast-track quantum material discoveries

- Generative AI designed 5 new battery materials - more powerful and sustainable than lithium

- The Hidden Environmental and Social Impacts of Generative AI

Like these kind of feel good stories? Get The Brighter Side of News' newsletter.

Shy Cohen

Writer